Introduction

Emotions instinctively influence our behavior and everyday decisions. As such, they are a great way to understand how users interact with products, and they help inform our designs. But how can we measure emotion?

Because emotion is expressed most visibly on our faces, Facial Expression Analysis is an effective method of measuring emotion and engagement.

Facial Action Coding System

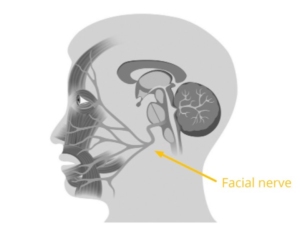

The face has 43 facial muscles. A single nerve, the facial nerve, triggers almost all of them. A second nerve, the oculomotor nerve, controls the upper eyelid and pupils.

With these 43 facial muscles, our faces show a wide range of emotions, including seven core emotions: Anger, Contempt, Disgust, Fear, Joy, Sadness, and Surprise. But how do we distinguish between the different emotions?

A system for analyzing facial motion units was developed by a Swedish anatomist, Carl-Herman Hjortsjö, then adopted by psychologists Paul Ekman and Wallace Friesen and developed into the Facial Action Coding System (FACS).

The Facial Action Coding System comprises:

- 46 Main Action Units break facial motion down by region (eyebrows, eyes, nose, mouth, and chin) to note things like raised eyebrows, squinting, and smiling.

- Eight Head Movement Action Units capture head tilts or movement of the head forward or back.

- Four Eye Movement Action Units note the eyes moving to the left or right, or up or down.

Emotions are then defined by combinations of Action Units. For example, the combination of Action Units for the emotion Happiness / Joy includes:

| Happiness / Joy | |
| --- | --- |
| Action Unit 6 Cheek Raiser | Action Unit 12 Lip Corner Puller |

Other combinations are more complex, like Anger, which comprises:

| Anger | | | |
| --- | --- | --- | --- |
| Action Unit 4 Brow Lowerer | Action Unit 5 Upper Lid Raiser | Action Unit 7 Lid Tightener | Action Unit 23 Lip Tightener |

Some Action Units appear in the expressions of several emotions. Action Unit 5 (Upper Lid Raiser), for example, is used in Surprise, Fear, and Anger.

| Surprise | Fear | Anger |
| --- | --- | --- |
| Action Unit 5 Upper Lid Raiser | Action Unit 5 Upper Lid Raiser | Action Unit 5 Upper Lid Raiser |
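The idea of defining emotions as combinations of Action Units can be sketched as a simple lookup. The snippet below is only an illustration: it contains just the two full combinations listed in this article (Happiness / Joy and Anger), and the names `EMOTION_ACTION_UNITS` and `matching_emotions` are made up for the example. Surprise and Fear are left out because their full combinations are not given here.

```python
# Action Unit combinations taken from this article only.
# Surprise and Fear are omitted: the article names only AU 5 for them.
EMOTION_ACTION_UNITS = {
    "Happiness / Joy": {6, 12},   # Cheek Raiser, Lip Corner Puller
    "Anger": {4, 5, 7, 23},       # Brow Lowerer, Upper Lid Raiser,
                                  # Lid Tightener, Lip Tightener
}


def matching_emotions(active_units):
    """Return the emotions whose full Action Unit combination is active."""
    active = set(active_units)
    return [
        emotion
        for emotion, required in EMOTION_ACTION_UNITS.items()
        if required <= active  # every required unit was observed
    ]


print(matching_emotions([6, 12]))        # ['Happiness / Joy']
print(matching_emotions([4, 5, 7, 23]))  # ['Anger']
print(matching_emotions([5]))            # [] (AU 5 alone is ambiguous)
```

Because a shared unit such as Action Unit 5 matches nothing on its own, the sketch only reports an emotion when the whole combination is present.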

Methods of Measurement

While facial expressions are a great indicator of emotion, a facial expression typically lasts only between 0.67 and 4 seconds, which makes it challenging to accurately map each emotion in the moment. The following three main methods of facial expression analysis address this challenge, each with its own advantages and disadvantages.

Facial electromyography (fEMG)

Facial electromyography (fEMG) is the oldest of the three methods. It involves recording the electrical activity of facial muscles using electrodes and specialized software. The resulting data can reveal facial muscle movements that are impossible to detect visually. Recordings are limited, however, by the finite number of electrodes that can be placed on the face. Applying the electrodes also requires some knowledge of both the facial musculature and correct electrode placement.

Video Analysis & Manual Coding of FACS

Video analysis and manual coding with the Facial Action Coding System is a non-intrusive method of collecting facial expressions. One major disadvantage, however, is that trained experts are needed to properly score the various Action Units. Additionally, a video recording of the user must be studied frame by frame, which makes the coding time-intensive and expensive.

For example, it can take a well-trained FACS coder about 100 minutes to code 1 minute of video data, depending on how dense and complex the facial actions are.
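To get a feel for what that ratio means for a study, here is a rough back-of-the-envelope calculation. The 100:1 ratio comes from the figure above. The 30 frames-per-second rate and the function name `manual_coding_estimate` are assumptions made for the example, and real coding time will vary with the footage.

```python
def manual_coding_estimate(video_minutes, fps=30.0, coding_ratio=100.0):
    """Estimate frames to review and coder hours for manual FACS coding.

    fps is an assumed camera frame rate; coding_ratio is minutes of
    coding per minute of video (about 100, per the figure quoted above).
    """
    frames = round(video_minutes * 60 * fps)
    coding_hours = video_minutes * coding_ratio / 60
    return frames, coding_hours


# A single 5-minute session recording:
frames, hours = manual_coding_estimate(video_minutes=5)
print(f"{frames} frames, about {hours:.1f} hours")  # 9000 frames, about 8.3 hours
```

Multiplying that by the number of participants shows how quickly manual coding can outgrow a typical research budget.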

Automatic Facial Expression Analysis

Unlike the methods above, automatic facial expression analysis doesn’t require high-end specialized equipment, electrodes, or cables, just a basic webcam and specialized software.

The software first identifies the face, then uses computer vision algorithms to detect facial landmarks such as the eyes, brows, tip of the nose, mouth, and corners of the mouth. A simplified model of the face is then adjusted in the software to match the user’s face. The software can then detect movements in the face and compare them against a database to record the emotion being expressed.
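The movement-detection step can be illustrated with a toy example. This is not how any particular product works: it assumes the landmarks have already been found, uses a hypothetical landmark layout (`left_eye`, `right_eye`, `nose_tip`, `mouth_left`, `mouth_right`), and picks an arbitrary threshold. It compares the current frame with a neutral frame to guess whether the lip corners have been pulled up, as in Action Unit 12.

```python
from math import dist

# Hypothetical landmark layout: name -> (x, y) in pixels, y grows downward.
Landmarks = dict[str, tuple[float, float]]


def interocular_distance(face: Landmarks) -> float:
    """Distance between the eyes, used to normalize for face size."""
    return dist(face["left_eye"], face["right_eye"])


def lip_corner_raise(neutral: Landmarks, current: Landmarks) -> float:
    """Average upward movement of the mouth corners, in interocular units."""
    scale_neutral = interocular_distance(neutral)
    scale_current = interocular_distance(current)
    total = 0.0
    for corner in ("mouth_left", "mouth_right"):
        # Measure each corner relative to the nose tip so that moving the
        # whole face within the frame does not count as an expression.
        rel_neutral = (neutral[corner][1] - neutral["nose_tip"][1]) / scale_neutral
        rel_current = (current[corner][1] - current["nose_tip"][1]) / scale_current
        total += rel_neutral - rel_current  # positive means the corner moved up
    return total / 2


def lip_corner_puller_active(neutral, current, threshold=0.05) -> bool:
    """Guess whether AU 12 is active; the threshold is an arbitrary assumption."""
    return lip_corner_raise(neutral, current) > threshold


neutral = {
    "left_eye": (100, 100), "right_eye": (200, 100), "nose_tip": (150, 150),
    "mouth_left": (120, 200), "mouth_right": (180, 200),
}
smiling = {**neutral, "mouth_left": (115, 190), "mouth_right": (185, 190)}

print(lip_corner_puller_active(neutral, smiling))  # True
print(lip_corner_puller_active(neutral, neutral))  # False
```

Normalizing by the distance between the eyes keeps the measurement comparable when the user sits closer to or farther from the camera.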

Various software packages now analyze facial expressions automatically, including Affectiva, which is noted to have the largest dataset of any facial expression analysis software company, with six million faces analyzed.

Conclusion

There are various methods of analyzing facial expressions, each with its own benefits and drawbacks. Deciding which to use will depend largely on the resources and time you have available.

https://imotions.com/blog/collect-and-analyze-facial-expressions/

https://blog.affectiva.com/the-worlds-largest-emotion-database-5.3-million-faces-and-counting

http://uxpamagazine.org/the-future-of-ux-research/