Image Credit: MIT News

Think about the apps, websites, and other digital products that we encounter every day. How do we feel when using them? Have some of them pleased us while others annoyed us? Are we aware of our reactions and behaviors when such feelings occur? Think of the times we have felt the urge to abandon a website or uninstall an app out of frustration, and we will understand how much emotion weighs in those interactions. Centering on users’ affective responses to digital products, this article provides some insights into the technologies used to detect emotions, as well as the pros and cons of such an approach.

Emotional Technologies

The user experience field has been striving to find valid measurements of users’ feelings toward a product, because good usability is necessary, yet not sufficient, to sustain continued use if the product fails to elicit positive emotions from users. Compared to self-reported techniques, which can be subject to bias and error, technologies that analyze physical responses are a more reliable way to objectively reflect how users really feel (Reynolds, 2017).

Understanding emotions requires an understanding of human physiological signals, including facial expressions, body language, tone of voice, etc., which can be measured and analyzed by a variety of sensing technologies. The main methods used are: motion capture systems or accelerometer sensors that track one’s body movements; biosensors placed on palms or fingers to measure increases in sweat; and cameras for measuring facial expressions (Preece, Sharp, & Rogers, 2015).

Facial Coding Example: Affdex

Image Credit: Affectiva

In recent years, advanced software and algorithms have been developed to detect facial expressions and analyze different emotions. A leading example of facial recognition or facial coding tools is the Affdex emotion analytics and insights software from Affectiva. Users’ reactions to digital content are captured through a webcam, analyzed using Affdex’s machine-learning algorithms, and classified into six categories (Preece et al., 2015). Below is a list of Affdex’s fundamental emotions and a simplified procedure of how Affdex works.

Affdex 6 Fundamental Emotions: 1) Happiness 2) Sadness 3) Disgust 4) Fear 5) Surprise 6) Anger (Preece et al., 2015)

Affdex cloud-based face video processing procedure:

1) Detect & Extract Features

Face detection. Image Credit: Affectiva

- Locate a face, and extract the key feature points on the face
- Identify 3 regions of interest: mouth, nose and upper half of face

2) Classify Emotional States

Analyze key regions. Image Credit: Affectiva

- Assess movements, shape and texture of the entire face at pixel level
- Take extracted facial features and classify them into emotional states
- Affdex data is received at 14 frames per second
- Once the facial features have been categorized, the resulting emotions are assigned numeric values for each frame

3) Assess & Report Emotion Response

Affdex dashboard. Image Credit: Affectiva

- Infer emotional and mental states to feed analytical models
- The Affdex dashboard visualizes a time series curve for each emotion metric, aggregating users’ emotional experiences (Affdex, n.d., pp. 4-5)
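To make the last two steps of the procedure more concrete, here is a minimal Python sketch of how per-frame emotion values could be turned into a time series curve for each emotion. It is not Affdex’s actual code or API: the function names, the dictionary-per-frame data shape, and the 0 to 100 score range are all assumptions made for illustration. Only the six emotion labels and the 14 frames per second figure come from the description above.

```python
from statistics import mean

# The six emotion categories listed earlier in this article.
EMOTIONS = ("happiness", "sadness", "disgust", "fear", "surprise", "anger")
FRAMES_PER_SECOND = 14  # frame rate quoted in the procedure above


def frame_timestamps(num_frames, fps=FRAMES_PER_SECOND):
    """Return the time in seconds at which each frame starts."""
    return [i / fps for i in range(num_frames)]


def per_second_series(frames, fps=FRAMES_PER_SECOND):
    """Average each emotion's per-frame scores over one-second windows.

    `frames` is a list of dicts mapping every emotion name to a numeric
    score (hypothetical shape; assumed to range from 0 to 100).
    """
    series = {emotion: [] for emotion in EMOTIONS}
    for start in range(0, len(frames), fps):
        window = frames[start:start + fps]
        for emotion in EMOTIONS:
            series[emotion].append(mean(f[emotion] for f in window))
    return series


def dominant_emotion(series):
    """Return the emotion with the highest average score over the session."""
    return max(EMOTIONS, key=lambda e: mean(series[e]))


if __name__ == "__main__":
    # Made-up data: one second of happiness followed by one of surprise.
    neutral = dict.fromkeys(EMOTIONS, 0.0)
    frames = [{**neutral, "happiness": 80.0} for _ in range(14)]
    frames += [{**neutral, "surprise": 60.0} for _ in range(14)]
    series = per_second_series(frames)
    print(series["happiness"], series["surprise"])
    print(dominant_emotion(series))
```

Averaging over one-second windows is just one simple way to smooth the frame-level values before plotting; a real dashboard may aggregate differently.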

Pros & Cons

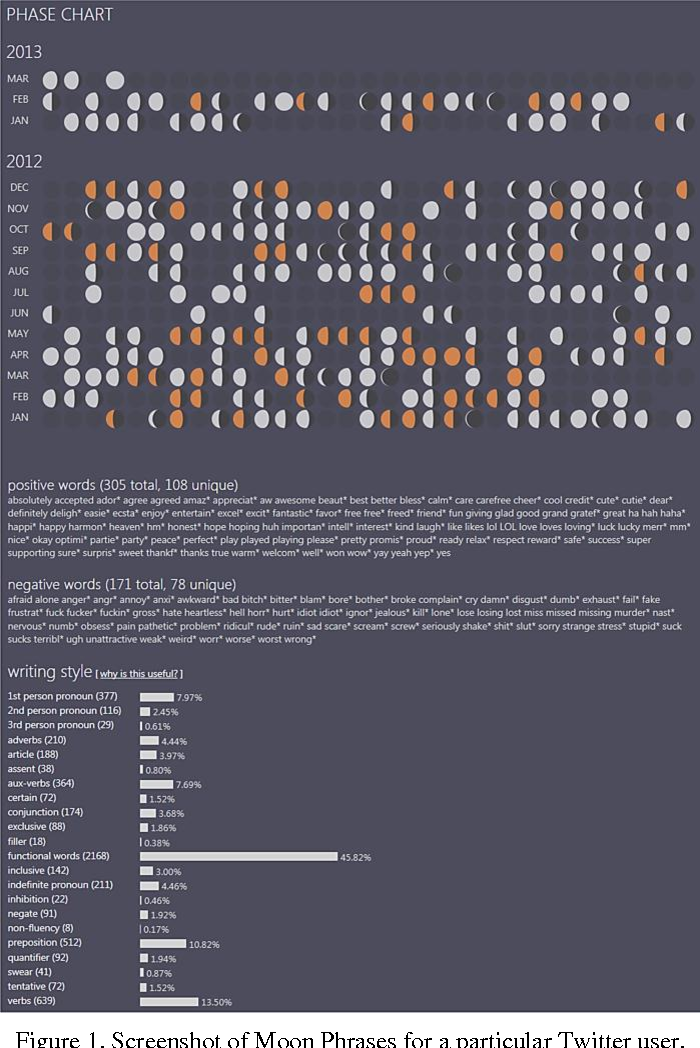

Emotional technologies provide honest feedback to designers, as they measure people’s physical responses on a non-conscious level. In addition to informing designs, further applications could also help people to self-assess their emotional states. For example, an app called “Moon Phrases” was developed to analyze users’ expressions on social media (e.g. Tweets, Facebook posts) through the choice and frequency of words, phrases, hashtags, emoticons, etc. This measurement of positive or negative emotions, in turn, is visualized using a series of moon icons representing different phases (Preece et al., 2015). Reflecting on the patterns that arise from the history of moons, users can become conscious of the fluctuations in their mood and perhaps be more motivated to improve their emotional well-being.
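The general idea of scoring a post by its word choice and mapping the result to a moon phase can be sketched in a few lines of Python. This is only an illustration of the concept, not how Moon Phrases itself works: the tiny word lists, the scoring formula, the phase names, and the function names are all hypothetical.

```python
import re

# Hypothetical mini-lexicons; a real tool would use a far larger vocabulary.
POSITIVE = {"happy", "great", "love", "excited", ":)"}
NEGATIVE = {"sad", "tired", "hate", "angry", ":("}

# Hypothetical mapping from mood to moon phases, darkest to brightest.
MOON_PHASES = ["new", "crescent", "quarter", "gibbous", "full"]


def mood_score(post):
    """Return a score in [-1, 1]: positive minus negative matches,
    divided by the total number of matches (0.0 if nothing matches)."""
    tokens = re.findall(r"[a-z']+|:\)|:\(", post.lower())
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


def moon_for(score):
    """Map a score in [-1, 1] onto one of the moon phases."""
    index = round((score + 1) / 2 * (len(MOON_PHASES) - 1))
    return MOON_PHASES[index]


if __name__ == "__main__":
    for post in ["I love this, so happy :)", "sad and tired :(", "love hate"]:
        print(post, "->", moon_for(mood_score(post)))
```

A simple word-count approach like this misses sarcasm, negation, and context, which is one reason such measurements should be read as rough indicators of mood rather than precise ones.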

Image Credit: Semantic Scholar

On the downside, how such information should be used, and in what context, might raise considerable concerns. The question is this: when technologies are capable of comprehending people’s emotions, and knowledge of one’s emotions is not only used to tailor the design of an app or a website but could also be exploited to push digital content (ads, movies, news, merchandise, etc.) matched to your mood (Preece et al., 2015), will you find it creepy or intrusive?

Limitations

While basic emotions such as the ones classified by Affdex might be explicit enough to be identified and measured, there are many other emotions, or perhaps mixtures of different states, that are more nuanced and thus not always recognizable. It can also be tricky for researchers or designers to tell exactly where an affective response is coming from. For example, if the facial coding produced a result of confusion, is it due to an unclear signifier of what to do next? Or a lack of feedback on the user’s previous action? Another concern raised by researchers is whether such measurements can be generalized across different countries or ethnic groups, because the expression and perception of emotions might vary across cultures (Ko, 2017).

Conclusion

In addition to the technologies mentioned above, eye-tracking, speech analysis, word and phrase analysis (e.g. Tweets, Facebook posts), and EEG wearables (e.g. headsets that pick up electrical signals from the brain) are widely applied to reveal users’ mental states. It is recommended to adopt a combination of methodologies for more useful insights. There is not yet a scientific way to precisely measure emotions (and I don’t think there ever will be, for they can be as complex, nuanced and ephemeral as you could imagine), but these techniques are relatively reliable for informing the design of user experiences.

References

Affdex (n.d.). Exploring the emotion classifiers behind Affdex facial coding. Retrieved October 18, from https://studylib.net/doc/13073324/white-paper-exploring-the-emotion-classifiers-behind-affd…#

Ko, D. (2017). Measuring emotions to improve UX. Retrieved October 18, from https://medium.com/paloit/measuring-emotions-to-improve-ux-e0c16dd5584a

Preece, J., Sharp, H., & Rogers, Y. (2015). Interaction design (4th ed.). United Kingdom: John Wiley & Sons Ltd.

Reynolds, A. (2017). How to measure emotion in design. Retrieved October 18, from https://uxfactor.wordpress.com/2017/10/14/how-to-measure-emotion-in-design/