Brandon Whightsel is Design Director at Yahoo Finance, where he has worked for six years. Prior to that, he worked at the Wall Street Journal, Reuters, AOL, and CBS News. We sat down to talk about how UX research and usability evaluation are integrated in his team’s work. The following transcript has been edited for clarity.

WM: Can you tell me a little bit about your job?

BW: I lead a team of nine designers, which has grown a lot in the last couple years. And that team designs all the products for Yahoo Finance on web and apps. We have an Android app, an iOS app, an iPad app, and of course web and mobile web products. Then also in the past couple years we’ve taken over TechCrunch and Engadget and we’re also working on a new personal finance product that hasn’t been released yet. So lots of different brands as the team has grown.

The designers tend to be organically aligned with platforms, so there's an iOS designer, an Android designer, a web designer, and so on. One thing we struggle with is breaking down the silos and getting people to collaborate more across platforms. We want our site, our apps, and our experience in general to be as consistent as possible across platforms where it's appropriate. That's hard to do when people are really siloed, because things tend to diverge naturally. Consistency also helps us internally, because it's more efficient and scalable and we're not solving the same problem in different ways. It takes a lot of effort to have a unified approach across everything.

We do a ton of usability research – that’s one of my favorite things about working at Yahoo. The way we get that done is there’s a UX research (UXR) team which is horizontal, and that means they serve all the brands, and they’ll do research for Finance and Sports and Yahoo Mail and the Yahoo homepage, what we call the different verticals or brands within our organization.

WM: So that’s broader than just your team?

BW: It is, but I have two people dedicated to my team. They're on a horizontal team and don't report to me, but they come to our design meetings every week and we have a weekly UXR readout with them, so it's almost like they're on my design team – they're really tightly integrated.

We do a ton of user testing, and we try to do it on a regular basis, every two weeks. We're always designing something, and there's always something we want to put in front of users. We're very nimble about it. We meet with our UXR teammate a couple of times a week, and we're always planning one or two weeks ahead: who's committing to having a prototype ready for user testing, or whether we're testing the live product instead. In the past we've sometimes shown people printouts, but it usually ends up being an InVision prototype or something like that. So we know a couple of weeks in advance what we're going to test. The designer works with the UXR teammate to plan what we're going to test and to have the prototype ready.

Then the UXR teammate facilitates the test, but we can all observe or join. They work out of Sunnyvale, so a lot of the testing happens there. They have a usability lab with a two-way mirror, so if we're there we observe in real time, and they also stream it. And sometimes we do remote testing, where the user is at their home in Florida or somewhere and we're observing from California and New York.

WM: Is it mostly in person testing, and is that the ideal? Or do you have a set of different methods that you use in combination?

BW: We use several methods. The main one is classic one-on-one usability testing. Our UXR teammate facilitates and works with one user, because we want to see how a user interacts with some feature, experience, or aspect of the app. We'll have built a prototype, and the facilitator works with the user one on one, either in person or remotely. In the last year we've been doing a lot more of it remotely. It's still one on one, and the facilitator uses the same method in real time, just from a different location, and he streams the test so the rest of us can watch the user interact with the app in real time.

WM: So he’s moderating the test while it’s happening and communicating with them?

BW: Yes, and often with the designers who are working on the project, or anyone else who wants to join – we try to encourage product and engineering to join, and sometimes they do. That way, if any issues come up with the prototype or we have additional questions, we can Slack him, and he responds to our comments in real time while he's facilitating. And if he gets stuck, he can ask us questions about the prototype. Usability testing is a team effort, but he's facilitating it, and that's a huge benefit. I haven't really tried to facilitate my own test, but I suspect a designer wouldn't be able to do a good job of facilitating their own test, because I think it takes some objectivity and a level of detachment. So it's great to have a UXR person facilitate it for you.

WM: Do the UXR people tend to have backgrounds in design or UX research specifically?

BW: They’re not designers, I think they have UX research backgrounds.

So that was usability research, that’s our main method, where we really try to suss out some of the issues as we try to refine the app over time as we’re designing it. We also do surveys. We’ll either ask a series of questions of users about how they might use products or how they use products in the finance space in general.

And we're always trying to come up with new methods or ideas that we can adapt to whatever scenario we have. Recently we were curious about a few different visual design directions we might go in, so we used SurveyMonkey to run a quantitative survey presenting three distinctly different design directions for a product. This was more about visual design than usability. We asked a series of about twenty multiple-choice questions, shared the survey with a thousand people across the country, and targeted two specific demographics to see whether our real target demographic differed from the general population. It was a fascinating test and gave us some great insights. It would be harder to do something like that with an interactive prototype, but for a flat visual design we got strong preferences for one of our design directions.

WM: That’s really interesting. Can you give me an example of what sort of feature or product you would be testing? What’s the scope of a typical test?

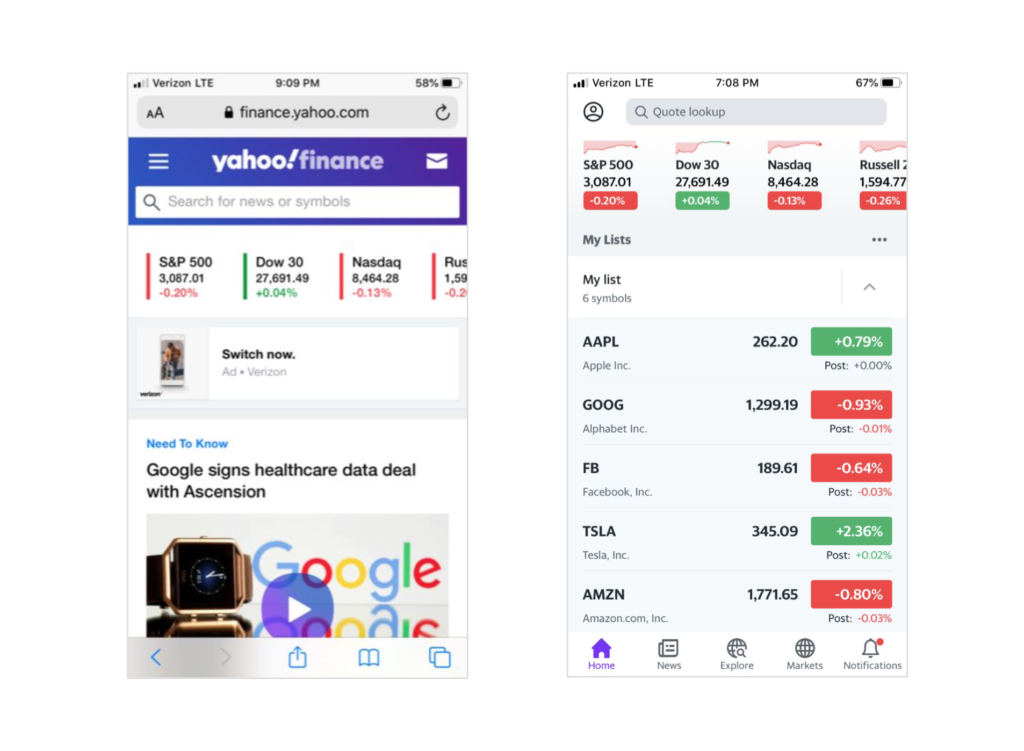

BW: The Yahoo Finance app is an app for investors, and its core functionality is that users build "Watchlists" of ticker symbols they want to follow. We know from our metrics that once people create a Watchlist, they become much more engaged in the app, their news becomes more relevant, and the whole experience gets better. So we're always trying to figure out how to get people, especially new users, to create a Watchlist. A designer recently had the idea of starting new users off with a kind of wizard for creating their first Watchlist. The first time a user comes in, it gives them a hint: all they have to do is hit the stars on a few symbols they like, or search for additional symbols they want, and then there's a big blue button that says "create my first list", and their list appears.

So we tested that this week. The designer created a pretty quick and easy InVision prototype that took a user through the main flows. There were a couple of aspects to it: users were on the homepage with sample symbols, and they could also use search to find other symbols. Even while designing the prototype and thinking through how the usability test would work, we came up with some additional flows. We ran the test this week and learned some good things. Mostly it validated the idea: people really engaged, and they knew what to do with the Watchlist. The only real feedback we got was about some of the intro text at the top, which confused people, because they immediately asked "what if I want more than one Watchlist?" or "what if I want to edit my Watchlist later?" They were a little hesitant to build a Watchlist because they thought they were really committing to something. So we learned to finesse the text at the top to let them know it's really easy to edit later.

WM: Interesting. Do you frequently discover things that are surprising or get steered in new directions you hadn’t been considering?

BW: I get really suspicious if all that's happening in a test is that I'm validating my judgments or assumptions. For me, the best usability testing is where I get a bunch of surprises and come away with a to-do list of twenty things to fix or make better. Sometimes when a new designer joins our team and their test finds a bunch of areas where users struggled, they feel like they failed, but actually they hit the jackpot. Some of the worst tests are when users blast through it without any problems, and you don't get any new ideas of things to fix.

In almost every test a user will give you feedback on something you weren’t even thinking about – something that you weren’t even testing but happens to be part of the prototype. So I encourage designers to go into the testing being really open minded and writing down everything they are observing and sharing that information with each other. We always debrief, and I always encourage designers to be involved in the testing as it’s happening in real time, because after the session, designers and the facilitator will get together and share notes, and usually through that conversation some new ideas will come up.

WM: It sounds like your UX research and usability evaluation is critical to your design process and you have a really built out system. In my experience, it’s hard to do that well, and especially hard when you have projects that are time bound and budget bound. Are those challenges you’ve faced, and was it an evolution to get to this point?

BW: I think it was an evolution. In my previous job at the Wall Street Journal, we did user research but it was done at the end of the project to validate the assumptions you’ve already made and everyone was hoping that there wouldn’t be any issues. And it was really expensive. We would do a big elaborate user test on an entire product after we’d been working on it for months, and it felt like going through the motions. And that’s not a knock on the Wall Street Journal, that’s just where user research was at the time. But the whole industry has evolved over the last six years.

When I came to Yahoo Finance, we had these resources, and we started working out a process and methods that fit our team. I always had a counterpart, someone on the user research team who wanted to work with me, and we developed a habit of testing early and often, and it became a great tool. Our partners in product and engineering recognized the value, because ultimately it creates efficiency and saves money, and engineers don't end up building the wrong thing.

It's also a great tool for making business decisions. Often when we're working with the product team to figure out which direction a product or feature should go, user testing becomes a way to settle those questions without people having to go with their guts and argue with each other. It usually really helps change minds. People at Yahoo react really well to metrics, so if you can put some research or metrics behind something, it's hard to argue with that. It hasn't been a struggle for a long time, because the business understands the value of it. But it has evolved: we organically created the system of testing every two weeks, the methods we follow, and the way we do it.

WM: That’s interesting and impressive. Do you have anything else you want to share, or things that you think young usability professionals should be keeping in mind?

BW: I've met designers in my organization whose business counterparts aren't bought into UXR, so they can't do a lot of it. It can be a struggle if people don't think they can afford it or don't think there's time for it. So it helps to be able to make the case that it saves everybody money, effort, and time, and that it doesn't have to be a big investment. It just takes a little bit of will and people willing to build some prototypes, and you can do it in a really scrappy way. Prototypes are how we communicate design ideas anyway; we almost never do flat screens. So it's just one extra step: in addition to showing it to executives and product and engineering, we also show it to some users. It just doesn't take a lot of investment.

One of my mantras is that the answers to the questions are outside of the building. We’re too close to the product, we understand it too well, we have all of our baggage of previous struggles of other things we’ve built. You don’t really know until you show it to others and see how they react to it.

One thing we do struggle with is recruiting participants; it's really hard. We've found, especially in the Bay Area, that there's a cohort of professional user testers, so you get some of the same people over and over. Sometimes you get people who you can tell don't use the product much but are pretending they do so they can come in and do the test. It feels like we've tapped out the audience in the Bay Area a bit, and that's why we've been getting a lot of mileage out of remote testing this year. The only drawback of doing it completely remotely is, of course, that the facilitator isn't there to probe on an area that is particularly interesting. It's really valuable to be able to dig into something or ask a question while the test is happening.