Google Lookout is an Android App designed to help people who are visually impaired identify their surroundings and get things done more quickly and easily. The App uses AI to identify objects through the camera of a user’s phone. Google states that people who are visually impaired, like everyone else, want to take control of their own lives. This product helps them ‘see’ things for themselves without having to ask others for help. The latest version of the App was released on August 11, 2020, and the App has more than 10,000 installs.

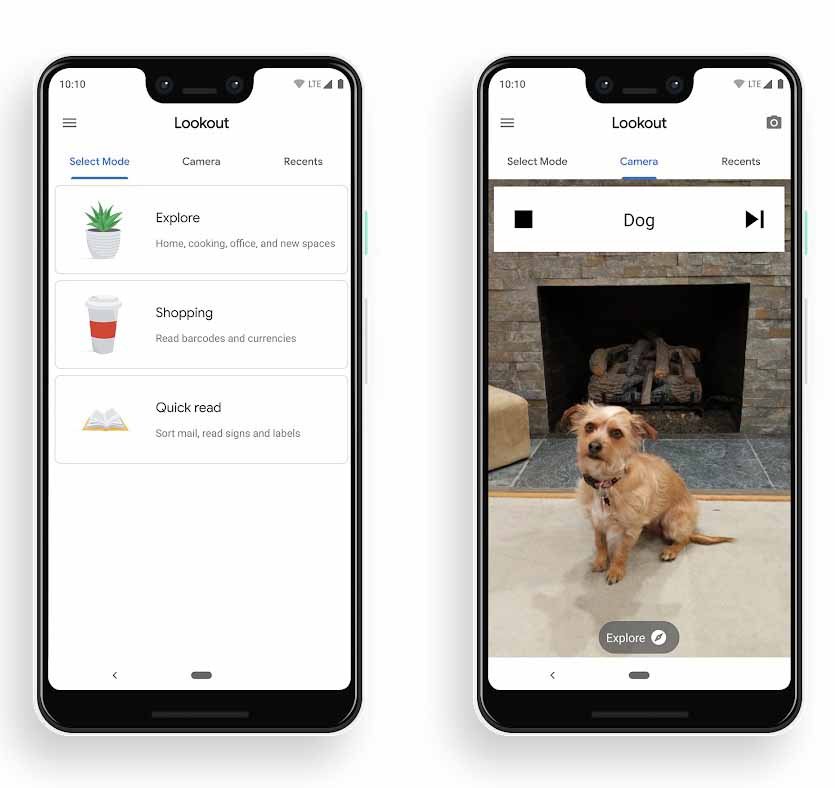

The App originally provided three modes: Explore (for the home, cooking, the office and new spaces), Shopping (reading barcodes and currency) and Quick Read (sorting mail and reading signs and labels). However, I wonder whether visually impaired users can reliably align the camera with a barcode. The latest version adds two new modes that make the App more convenient. The first is Food Label mode, in which users do not need to scan a barcode; they simply open the mode to get food label information quickly. The second is Scan Document mode, which lets users read a whole page of text by taking a snapshot of the document. These five modes make getting information more convenient and efficient not only for visually impaired users but also for users without a permanent visual impairment. For instance, if I need to read the instructions for a piece of equipment in a very dark room, the App can help.

After you choose a mode, the App takes you to the camera tab and automatically recognizes things for you. One good design choice is that users who don’t know which mode to choose can simply switch to the camera tab to get descriptions, which improves the App’s usability. In addition, the App speaks the results aloud and indicates the direction of each object, for example ‘the door at 12 o’clock’, which is considerate and friendly for visually impaired people.

To explore their surroundings with the App, Google recommends that users wear their phone around their neck or place it in the front pocket of a shirt. I think these recommendations are sensible because holding the phone continuously can be inconvenient, especially for some disabled people. For instance, a user might be climbing stairs with heavy packages in both hands and want to know whether the thing rolling down the stairs is a cat or a bottle. Offering this recommendation shows that Google has thought carefully about how the App is used in real life.

The App is a good example of the social model of disability, which holds that disability is caused by poor design. By using the App, visually impaired users can see that they themselves are not the problem; the real problem is the lack of accessibility that causes them trouble. The product also reflects the functional solutions model, which focuses on how to overcome the limitations of disability: all of the App’s features, along with its voice responses, were designed to overcome the inconvenience caused by visual impairments.

Currently, Lookout is available only on Android phones, so it is not accessible to iOS users or people using other devices. However, the App is free to download, which makes it far more affordable than assistive technology products for people who are blind or have low vision, such as OrCam MyEye or IrisVision, whose high prices can prevent many users from getting this kind of assistance.

Bibliography:

1. Lookout By Google – Apps on Google Play:

https://play.google.com/store/apps/details?id=com.google.android.apps.accessibility.reveal&hl=en_US

2. Google Lookout: App reads grocery labels for blind people:

https://www.bbc.com/news/technology-53753708

3. Google releases Lookout app that identifies objects for the visually impaired:

https://www.theverge.com/2019/3/13/18263426/google-lookout-ai-visually-impaired-blind-app-assistance