Seeing AI is a Microsoft app that applies AI to accessibility. It offers several modes to assist blind and low-vision users, including a barcode scanner, a document reader, and experimental AI modes such as person recognition.

“Seeing AI is a free app that narrates the world around you. Designed for the blind and low vision community, this ongoing research project harnesses the power of AI to open up the visual world and describe nearby people, text and objects.”

– Microsoft, product description

Framed by the social model of disability, which locates barriers in the environment rather than in the person, the app takes a functional-solutions approach: it aims to build an accessible pathway between blind users and the world around them. The app itself puts considerable focus on usability and accessibility. Each mode comes with an explanation of its features and a corresponding video tutorial. However, Seeing AI is optimized for VoiceOver, Apple’s built-in screen reader. This integration means that the app’s discoverability and accessibility are inherently dependent on Apple’s own accessibility support in iOS. Many of the modes, particularly the text recognition modes, resemble well-known technologies such as screen readers, so I chose to focus on evaluating some of the app’s more experimental modes.

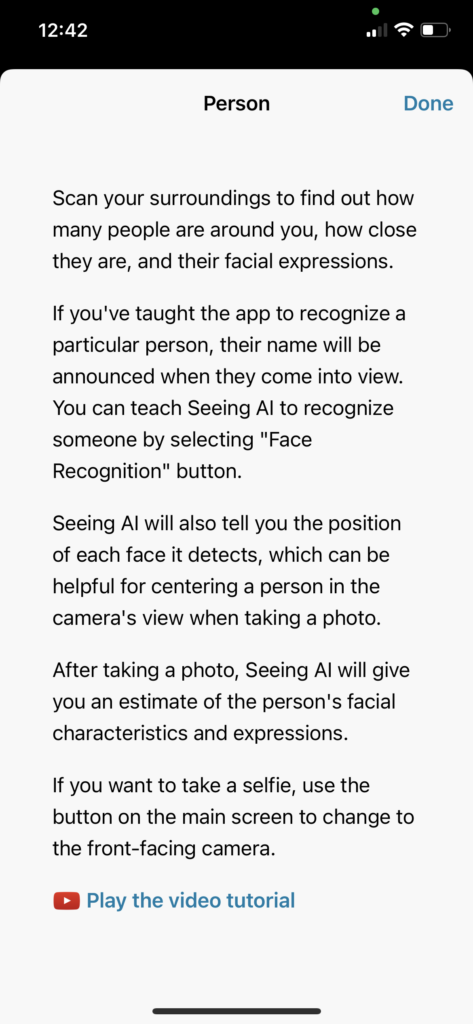

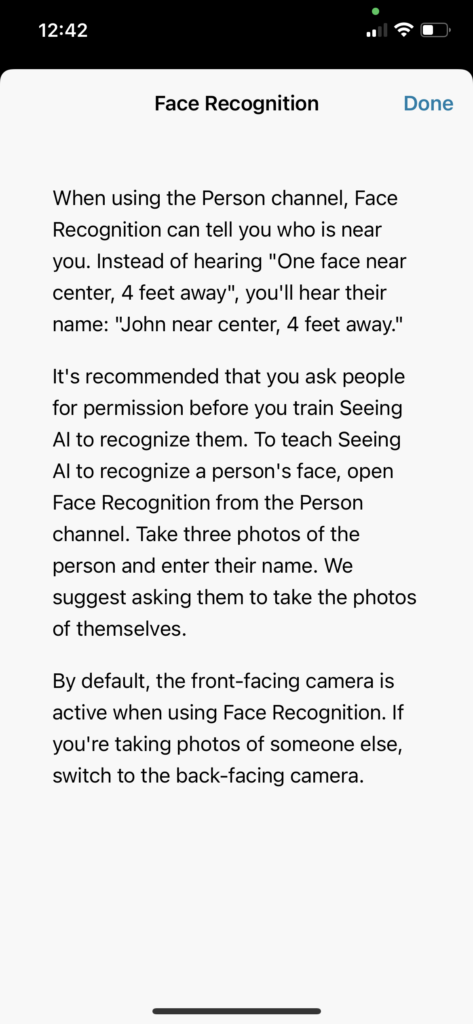

Person Recognition

This mode scans the faces of nearby people and reports their distance and position relative to the camera. You can also switch between the front- and rear-facing cameras; however, the camera-switch button is small and requires a precise tap, which may be difficult without VoiceOver. The most interesting feature of this mode is that you can teach your device to recognize people you interact with regularly, such as coworkers, family, or friends. Once you save a person’s name along with their face, the app will identify them by name in future encounters.

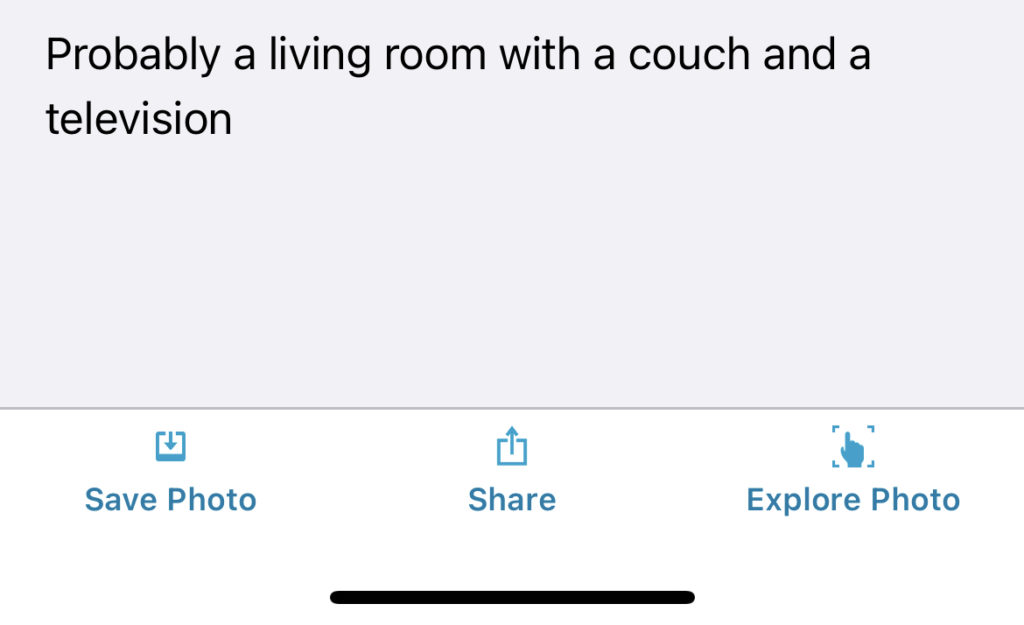

Scenery Recognition

To use scenery mode, you simply take a photo, and the app uses AI to list (vaguely) the objects in your environment. This mode is still highly experimental and will likely need more development before it becomes truly useful. In the example above, the app does not technically get anything wrong in its scenery description: every object it lists is present in the photo. However, it does not tell me what those objects look like, how large they are, or where they are in relation to me, which greatly limits this mode’s usefulness.

Light Perception

This mode was very accurate and well designed. The app emits a tone whose pitch reflects the amount of light around you. I tested the pitch range and the accuracy of the app’s perception by walking from a darker corner of my room toward a lamp. As expected, the pitch rose the closer I got to the lamp. The sounds and frequencies also appear to have been chosen with care: they remain audible even over background noise such as a loud air conditioner or television, yet are not irritating even at the highest pitch.
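To make the light-to-pitch behavior more concrete, here is a minimal sketch of how such a mapping could work. It is not Seeing AI’s implementation: the function name `brightness_to_pitch`, the normalized brightness input (0.0 for dark, 1.0 for bright), and the 220 to 880 Hz range are all hypothetical assumptions chosen for illustration.

```python
# Hypothetical frequency bounds (two octaves); Seeing AI's real values are unknown.
MIN_FREQ_HZ = 220.0
MAX_FREQ_HZ = 880.0


def brightness_to_pitch(brightness: float) -> float:
    """Map a normalized brightness reading to a tone frequency in Hz.

    0.0 means darkness and 1.0 means the brightest expected reading.
    Interpolation is geometric (logarithmic in frequency), so equal
    steps in brightness produce equal musical intervals.
    """
    # Clamp readings that fall outside the expected 0..1 range
    b = min(max(brightness, 0.0), 1.0)
    # Geometric interpolation between the lower and upper bounds
    return MIN_FREQ_HZ * (MAX_FREQ_HZ / MIN_FREQ_HZ) ** b


if __name__ == "__main__":
    # Simulate walking from a dark corner toward a lamp: pitch should rise
    for reading in [0.05, 0.3, 0.6, 0.9]:
        print(f"brightness {reading:.2f} -> {brightness_to_pitch(reading):.1f} Hz")
```

The logarithmic scale is one design choice among several. A linear mapping would also rise with brightness, but because pitch perception is roughly logarithmic, equal brightness steps would sound uneven to the listener.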

Conclusion

Overall, public reception of the app appears positive. Its App Store reviews suggest that it is widely used by the blind and low-vision community, mostly with success. However, reviewers do raise some limitations. Some modes require help from a sighted person to position an object correctly in the camera frame, which undermines the main purpose of many of those modes, and users report frequent frustration with glitches and crashes. While many of the experimental modes still need more work, user feedback, and time to mature, the app makes real strides in using AI for accessibility.