BERRYPICKING: A discussion based on Bates’s The Design of Browsing… (1989)

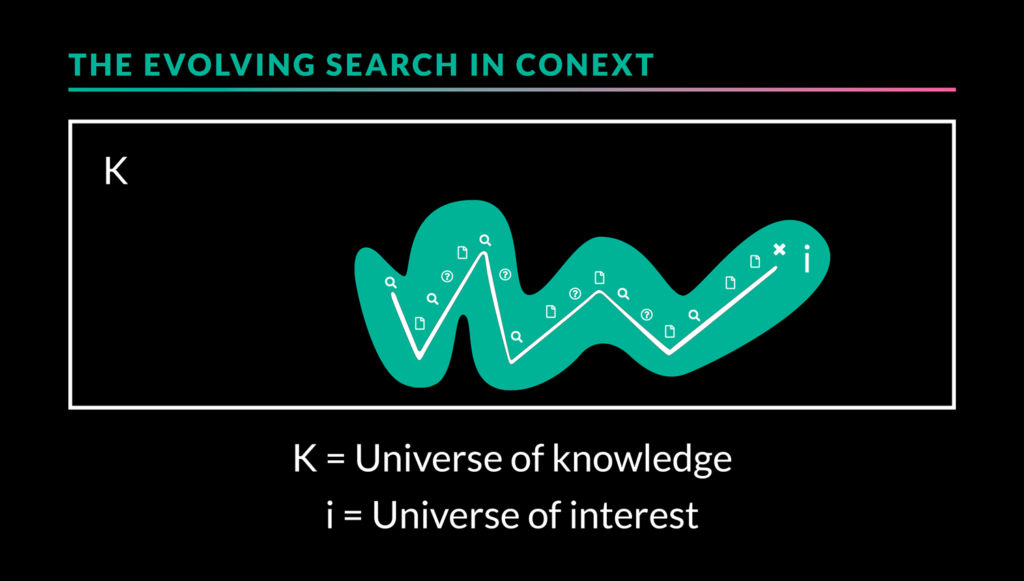

This discussion of berrypicking techniques and how users retrieve information online is based heavily on Marcia Bates’s The Design of Browsing and Berrypicking Techniques for the Online Search Interface (1989). The berrypicking technique is… well, it refers to a lot of things. First, it’s a model for information retrieval, or how we search and find […]
