Alexander Street Landing Page Redesign (Design Story)

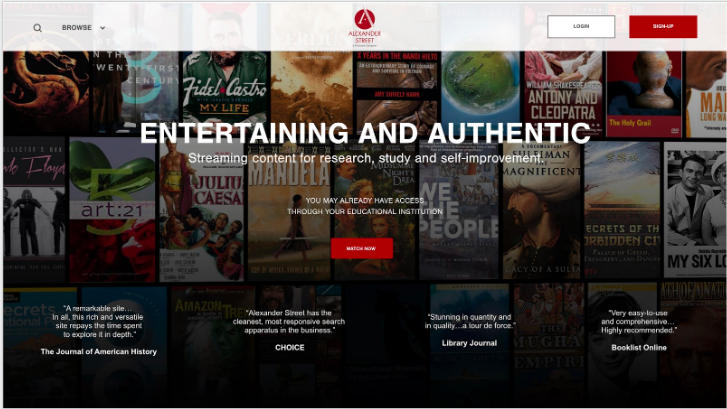

Project Brief: The goal of this project was to give users of Alexander Street Press, a video and primary source archive for educational programming, the most satisfying landing page possible: one that would aid sign-up statistics and let users know what it is that Alexander Street has that […]
