Virtual reality has stubbornly withstood decades of technological limitation and repeated market failure to finally reach the threshold of public adoption. It has the distinction of predating the first GUI, has captured the public’s imagination, and has gone through several generations of consumer products. Now that the technology inside our smartphones lets us dive into VR and experience its potential as a medium, it is becoming increasingly important to design interfaces that afford users control. Just as GUIs gave novices an accessible means of interaction, there must also be a framework that lets users interact naturally with a system in 3D. Specifically, let’s look at how controllers, both physical and virtual, are designed to make interfaces more usable.

General Design Guidelines

The inherent disorientation of entering a virtual world makes it all the more necessary for users to feel comfortable and in control. There are now common guidelines, recommended by many including Google and Oculus, that encourage developers to be deliberate about scale, spatial audio, freedom of movement and visual cues to keep users comfortable. But how do users actually interact?

The Reticle

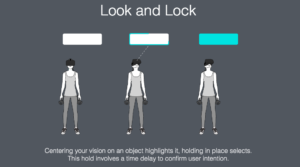

With a small circle, or reticle, placed in the center of the user’s field of vision, the user can interact with their surroundings simply by looking at them. Although this somewhat diminishes the sense of immersion, being able to look at an object and interact with it feels natural and satisfying. Some interactions require the user to aim the reticle at an object and press a physical button on the head-mounted display (HMD) or controller; others use “look and lock” or “fuse” buttons, which activate once the user holds their gaze on them for a set time. These eliminate the need for a physical button, but they also take the user longer to trigger.
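A fuse button boils down to two pieces: deciding whether the gaze ray from the center of view hits a target, and counting how long it stays there. The sketch below is a minimal illustration under stated assumptions: targets are approximated by bounding spheres, the app calls `update` once per frame with the elapsed time, and the names `gaze_hits_sphere`, `FuseButton` and the 1.5-second dwell are hypothetical, not taken from any VR SDK.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def gaze_hits_sphere(head: Vec3, forward: Vec3, center: Vec3, radius: float) -> bool:
    """True if the gaze ray from `head` along `forward` passes through the sphere."""
    to_center = _sub(center, head)
    if _dot(to_center, to_center) <= radius * radius:
        return True  # the viewer's head is already inside the target
    norm = math.sqrt(_dot(forward, forward))
    direction = (forward[0] / norm, forward[1] / norm, forward[2] / norm)
    t = _dot(to_center, direction)  # distance along the ray to the closest approach
    if t < 0:
        return False  # target is behind the viewer
    closest_sq = _dot(to_center, to_center) - t * t
    return closest_sq <= radius * radius


@dataclass
class FuseButton:
    dwell_seconds: float = 1.5  # assumed dwell time; tune per app
    elapsed: float = 0.0
    fired: bool = False

    def update(self, gazed: bool, dt: float) -> bool:
        """Advance by one frame of `dt` seconds; return True only on the frame it fires."""
        if not gazed:
            # Looking away cancels the countdown and re-arms the button.
            self.elapsed = 0.0
            self.fired = False
            return False
        if self.fired:
            return False  # already triggered; wait for the gaze to leave
        self.elapsed += dt
        if self.elapsed >= self.dwell_seconds:
            self.fired = True
            return True
        return False

    @property
    def progress(self) -> float:
        """Fraction of the dwell completed, e.g. to fill a ring around the reticle."""
        return min(self.elapsed / self.dwell_seconds, 1.0)
```

Each frame, an app would call `button.update(gaze_hits_sphere(head, forward, center, radius), dt)` and could draw `progress` around the reticle so the user can see the countdown running. The dwell time is the trade-off the text describes: a longer dwell makes accidental activation less likely but makes every press slower.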

The reticle is also the most minimal form of a heads-up display (HUD), in which layers of information and interactions are overlaid on a plane between the user’s vision and the environment, much like the interface of a first-person shooter (FPS) video game.

Physical Controllers

While interactions with the reticle are simple and natural, they lack the depth of functionality that a physical controller offers. Some controllers are relatively simple, like the one bundled with the recently released Daydream View, a minimalist two-button “magic wand.” With it, users can point at objects on the screen for basic point-and-click interaction.

More complex setups are more powerful, pairing multi-button, tracked controllers for both hands. With two controllers, using your hands in tandem opens up possibilities for gestural input that more closely mirror how your hands actually appear in VR. A very compelling use of these controllers can be seen in Google’s Tilt Brush, a creative app for painting in 3D.
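One gesture that two tracked controllers make possible, and a single pointer cannot, is scaling an object by moving both hands apart or together. The following is a minimal sketch, assuming the runtime reports each controller’s position in metres and whether its grip button is held; the class `TwoHandScale` and its method are hypothetical and not part of any VR SDK or of Tilt Brush.

```python
import math
from typing import Optional

Vec3 = tuple[float, float, float]


class TwoHandScale:
    """Hypothetical two-handed scale gesture: pull hands apart to grow, together to shrink."""

    def __init__(self, scale: float = 1.0) -> None:
        self.scale = scale
        self._start_distance: Optional[float] = None
        self._start_scale = scale

    def update(self, left: Vec3, right: Vec3, both_gripping: bool) -> float:
        """Call once per frame with both controller positions; returns the current scale."""
        if not both_gripping:
            self._start_distance = None  # gesture ends when either grip is released
            return self.scale
        distance = math.dist(left, right)
        if self._start_distance is None:
            # Gesture begins: remember the starting hand spacing and scale.
            self._start_distance = distance
            self._start_scale = self.scale
        elif self._start_distance > 0:
            # Scale in proportion to how far the hands have moved apart or together.
            self.scale = self._start_scale * distance / self._start_distance
        return self.scale
```

Measuring the change relative to where the hands were when the gesture began, rather than frame to frame, keeps the object’s size locked to the physical spacing of the hands, which is what makes the gesture feel direct.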

VR Buttons

While controllers have physical buttons, buttons inside the VR environment might eventually advance far enough to eliminate the need for physical controllers altogether. A big challenge for VR buttons is providing proper feedback and an appropriate feel. One designer devised a button that emulates a sensation “akin to submerging the surface in a liquid to change its state.”

This single button is an interaction idea that seems both pleasurable and practical, and it takes advantage of VR’s ability to let users push through a plane in 3D. Although it offers no physical feedback and is possibly a bit too skeuomorphic, there is a certain elegance to its design: it asks to be pushed.
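The appeal of this design is that the state change is tied to how far the hand passes through the surface. A minimal sketch of that idea follows, assuming hand tracking reports a fingertip position and the button is a flat plane facing +z; the class `PlaneButton`, the 2 cm trigger depth and the re-arming rule are illustrative assumptions, not the designer’s actual implementation.

```python
from dataclasses import dataclass


@dataclass
class PlaneButton:
    """Hypothetical push-through button: a flat surface at z = surface_z, facing +z.

    A fingertip below the surface is 'submerged'. Sinking past trigger_depth toggles
    the button once; the finger must leave the surface before it can toggle again.
    """

    surface_z: float = 0.0
    trigger_depth: float = 0.02  # assumed value, in metres
    on: bool = False
    armed: bool = True

    def depth(self, finger_z: float) -> float:
        """How far the fingertip has sunk below the surface (0 when above it)."""
        return max(0.0, self.surface_z - finger_z)

    def submersion(self, finger_z: float) -> float:
        """0..1 visual cue, e.g. how strongly to animate the 'liquid' surface."""
        return min(self.depth(finger_z) / self.trigger_depth, 1.0)

    def update(self, finger_z: float) -> bool:
        """Feed the tracked fingertip height each frame; returns the button state."""
        d = self.depth(finger_z)
        if d == 0.0:
            self.armed = True  # finger is out of the surface: allow the next press
        elif self.armed and d >= self.trigger_depth:
            self.on = not self.on  # state changes once per push-through
            self.armed = False
        return self.on
```

Requiring the finger to leave the surface before the next toggle stops a single push from flickering the state while the fingertip jitters around the trigger depth. Since the button gives no physical feedback, a visual cue such as `submersion` has to carry that burden instead.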

Now imagine an array of buttons like these appearing whenever we put on our VR or AR HMDs as part of our daily lives. There may come a time when our productivity is closely linked to interfaces displayed in our virtual or augmented world. Instead of a desk with a keyboard, a monitor and a picture of a deserted island, you would have a deeply customizable 3D environment that offers far more interactivity than our current 2D workflows. Designing these interactions and experiences will surely pose difficult challenges for the UX and UI designers of the future.