What is it?

Envision Glasses is a pair of AI-powered smart glasses that helps people with visual impairments read text, identify people, describe their surroundings, and navigate. Built on the Enterprise Edition of Google Glass, Envision Glasses converts everyday visual information into speech and reads it aloud to the user.

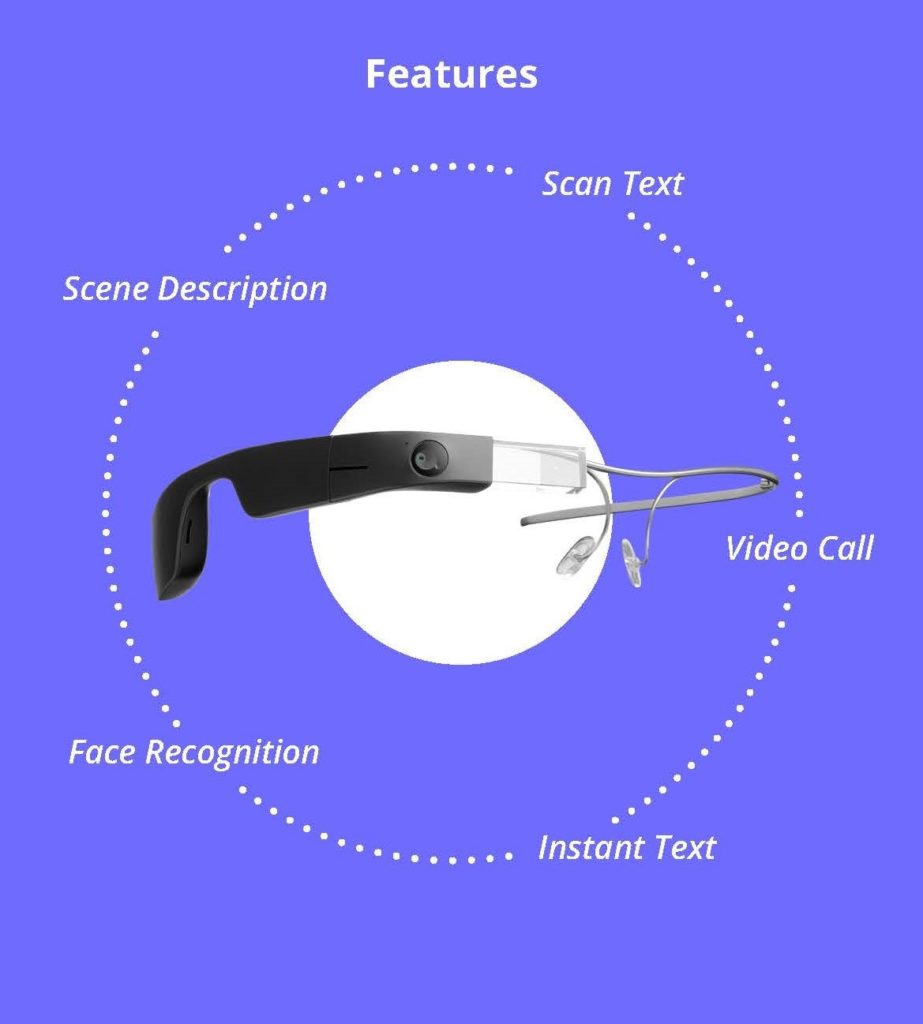

Product Features

Sight is one of the most important senses for absorbing information from the environment. For visually impaired people, however, gaining this information is difficult. They often need to ask others for help with daily routines, such as recognizing the people in front of them, distinguishing banknotes, and dealing with unexpected obstacles on the road. The goal of Envision Glasses is therefore to convert as much visual information as possible into audio. This increases the amount of information visually impaired people can access and improves their independence.

To reach this goal, Envision Glasses offers three main features, each addressing a different type of information.

Feature 1: Read

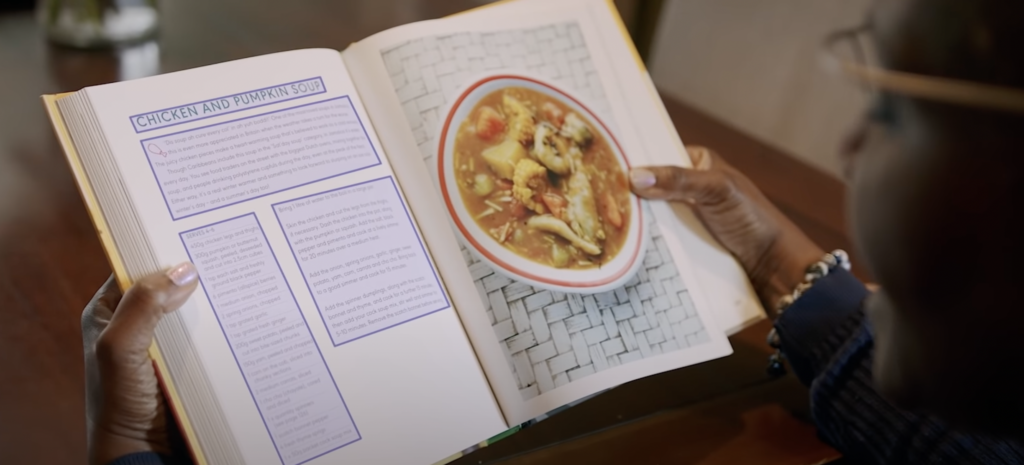

First, the most basic feature of Envision Glasses is text scanning. It can read short or long-form text aloud, including handwritten notes, newspapers, and magazines placed in front of the user. Users can also rely on it to check which platform they are on when taking a train, or to understand a poster on the street. The aim is to let users read any text, anywhere.

Feature 2: Identify and Find

Second, text is not the only information people need in daily life. They also need to understand the scene around them, including the objects nearby. Envision Glasses therefore offers the Describe Scene and Explore features, which let users explore the world around them. These features can identify light sources, banknotes, and colors, find objects, and even recognize people the user knows.

Feature 3: Call for help

Last but not least, Envision Glasses allows users to call friends for help when they face a situation they cannot solve on their own. Although Envision Glasses helps users handle their daily routines more independently, unexpected situations can still arise. The Envision Ally feature lets users make hands-free video calls to trusted friends and family at any time, from anywhere. This gives users peace of mind, knowing that someone is always available to help.

Models of Disability

To evaluate whether these features meet users' actual needs, I will use different models of disability to examine whether, and how, this product is accessible.

Functional solution view of the product

In this case, the target users of the product are people with visual impairments, that is, people who lack sight or have limited ability to use it to gain information from the world. This definition maps directly onto the product's main features, Read and Identify. Both features move visual information into another sense, challenging the norm that most information is gained visually. By converting visual information into audio, the product lets visually impaired people access far more information, which makes it a very successful example of assistive technology. However, is it accessible enough? Perhaps not. Not all visually impaired people have full hearing, and some may have difficulty raising a hand to operate a device worn on the head. I therefore believe there is still room to improve it into a better product.

Social model view of the product

Unlike the ordinary glasses we see on the street, this product is a pair of glasses without lenses. This distinctive design may make wearers stand out in public, which may conflict with the wish of many visually impaired people not to be seen as "special". In addition, the asymmetric design only serves right-handed users: the touchpad used for control is placed only on the right side, which completely overlooks left-handed users. Relying on gestures to control every option may also be a problem. Many people with visual impairments carry a white cane at all times, so one hand is already occupied, and the other hand needs to stay free in case of unexpected hazards. Requiring gestures for every control may therefore not be a good design choice.

In conclusion, Envision Glasses is a very good example of an assistive technology product that provides an impressive functional solution to reach its goal. However, some design details still need attention: the look of the glasses may conflict with users' wish not to stand out, and the gesture-based controls may not suit all users.