Apple’s VoiceOver is a screen reader that enables people with visual impairments to navigate their devices through auditory feedback and gesture-based controls. In this blog post, I briefly analyze its accessibility features through the lenses of the functional and social models of disability.

Gesture-Based Control: Usability Meets Accessibility

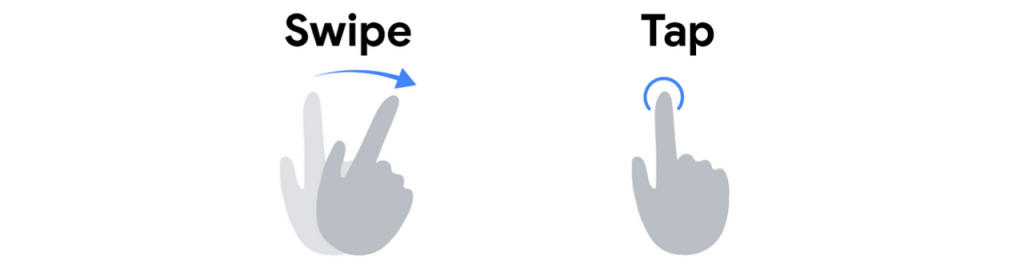

One of VoiceOver’s most important features is its gesture-based control, which replaces traditional visual navigation with simple taps and swipes. This feature offers real utility: it meets the needs of users with visual impairments by letting them access apps, settings, and content without relying on visual cues. Its usability is also high. For example, users swipe right or left to move to the next or previous element on the screen and double-tap to activate the selected item, which makes navigation both accessible and efficient.

From a social model perspective, gesture-based control removes an environmental barrier by offering an alternative to visual navigation. It also promotes autonomy: users can participate fully in digital spaces without needing help from a sighted person, which addresses the societal barriers that typically limit access.

Screen Recognition: AI Expanding Accessibility

Screen Recognition uses machine learning to identify and label UI elements in apps that lack accessibility support, greatly improving the usability of such third-party apps. This feature aligns closely with the Functional Solutions Model, using AI to solve the real-world problem of inaccessible apps. It bridges many gaps by making otherwise inaccessible apps usable, but its effectiveness varies with each app’s complexity and how well the app works with VoiceOver. Some apps cannot be fully interpreted by screen analysis, which limits the feature in those cases.

Image Descriptions: Enhancing Inclusivity

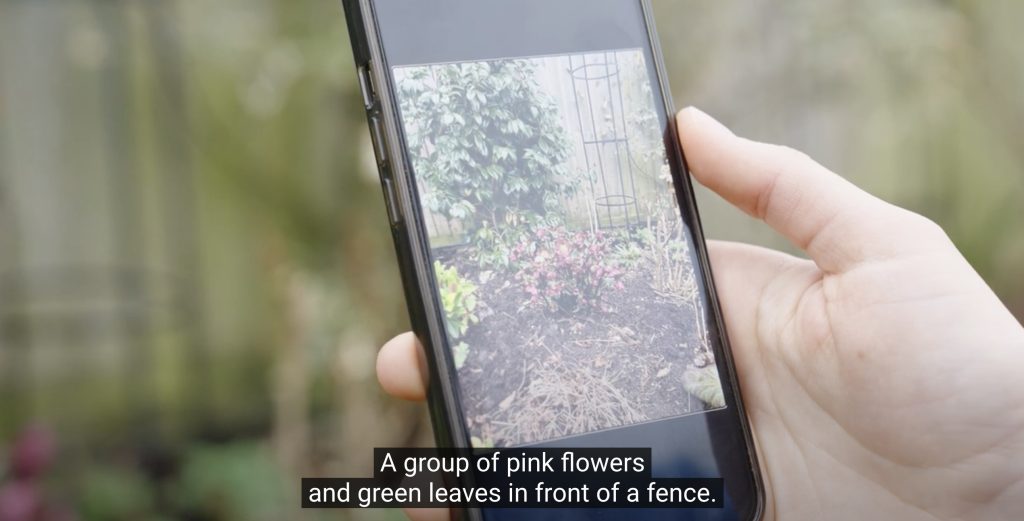

VoiceOver’s Image Descriptions feature provides spoken descriptions of visual content, such as objects, scenes, or people in photos. For instance, when viewing a photo, VoiceOver might say, “a group of pink flowers and green leaves in front of a fence.” This feature serves a critical purpose by giving users with visual impairments access to visual information that would otherwise be unavailable to them.

From an accessibility standpoint, Image Descriptions enhances inclusivity by letting visually impaired users engage with visual content on their own. By breaking down barriers to information and media, the feature reflects the social model: it offers a more equal opportunity to experience and enjoy digital content, so that visually impaired users can participate more fully and independently in a digital landscape that has long been predominantly visual.

Examining these features through the functional and social models of disability makes it clear that VoiceOver is designed to give its users practical solutions to real-world accessibility challenges while promoting autonomy and inclusivity.

Useful resources

How to use VoiceOver on iOS devices – a 1-min YouTube video

More of Apple’s accessibility practices

VoiceOver guide for Mac

Apple’s VoiceOver Screen Recognition: Using Machine Learning to Implement Accessibility