Ava is an iOS app that provides real-time transcription of spoken conversation, making verbal communication more accessible for people who are deaf or hard of hearing.

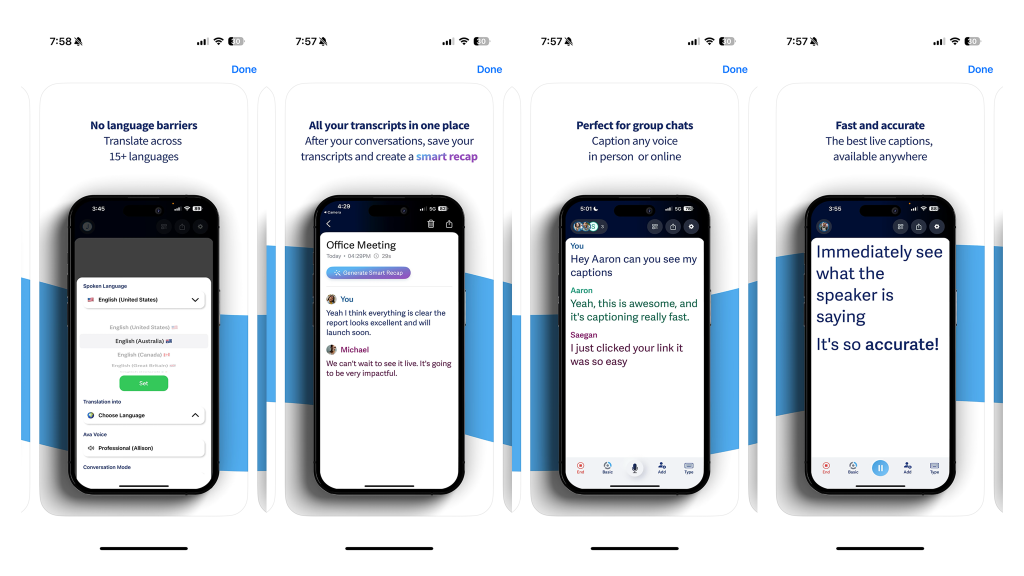

Designed to be easy to use, Ava has a simple, minimal interface that lets users start captioning quickly. Features such as speaker identification and text size are customizable, which makes the app adaptable to different users’ needs. For visual accessibility, it displays captions in clear, large text for users who are deaf or hard of hearing, and it supports multiple languages. Below, two key features of Ava are examined through different models of disability to understand their accessibility strengths and potential limitations.

The first feature is real-time transcription. The social model of disability views disability as a result of societal barriers rather than individual impairments. Ava’s real-time transcription aligns with this model by removing a communication barrier that often isolates people who are deaf or hard of hearing. By offering real-time transcription, Ava enables participation in conversations where sign language interpreters or other accommodations may not be available. The medical model, by contrast, characterizes disability as a condition rooted in biological impairments. While Ava does not “cure” hearing loss, its reliance on AI-driven transcription may be perceived as addressing an individual deficit rather than advocating for broader accessibility initiatives, such as universal captioning solutions. The idea is that accessibility should be embedded into mainstream technologies as a default feature rather than depending on third-party apps like Ava.

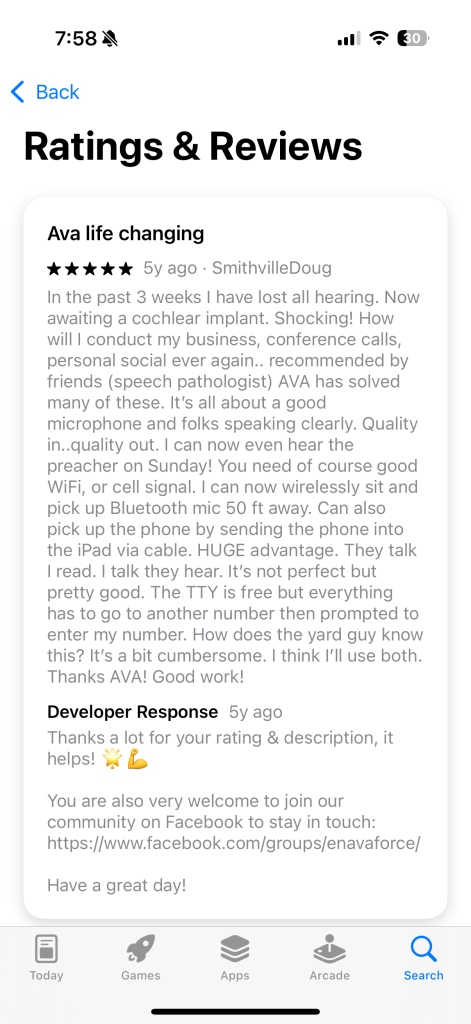

The second feature is multi-speaker captioning, which requires a paid premium subscription. The economic model of disability considers the financial burden of accessibility solutions. While the app itself is innovative, I believe the cost barrier may prevent some users from accessing its full benefits, limiting its accessibility to those who can afford it. The multi-speaker feature also has functional limitations: speaker identification will not always be 100% accurate, and users have to rely on the color-coded labels in the app’s UI rather than the tone of the person speaking. Moreover, for sign language users, text captions are limited in capturing the grammar and structure of sign languages, which could create a gap in understanding.

In conclusion, Ava exemplifies assistive technology that aligns well with the social model of disability by removing barriers and promoting inclusive communication. However, challenges in accuracy and affordability highlight ongoing issues under the economic model of disability and reinforce aspects of the medical model, where technology is framed as compensating for impairments rather than transforming environments.