In the fall of 2024, Apple released its first built-in eye tracking feature as part of its suite of accessibility tools. The feature allows iPhone and iPad users with physical or motor disabilities to navigate their devices using only their eye movements, without external sensors or accessories. iOS devices are not known for being affordable. However, iPhones make up more than half of the US mobile device market, and dedicated eye-tracking assistive technology and accessories can cost anywhere from hundreds to thousands of US dollars. On-device eye tracking is therefore an exciting development that could make this kind of assistive technology more affordable for many people with physical disabilities.

How Does iOS Eye Tracking Fit into the Models of Disability?

As a tool built on top of existing technology to improve access to that same technology, the new iOS eye-tracking feature aligns most closely with the functional model of disability. The functional model focuses on creating technology and tools that make the existing world more accessible, emphasizing individualized tools that help people with disabilities interact with their environment more easily. This contrasts with developments under the social model of disability, in which the existing environment itself is changed to provide equitable experiences for all people.

Both models of disability lead to tangible changes for people with disabilities (whether motor, vision, hearing, etc.), but they take different approaches to doing so.

Key Features of iOS Eye Tracking

Gaze Position

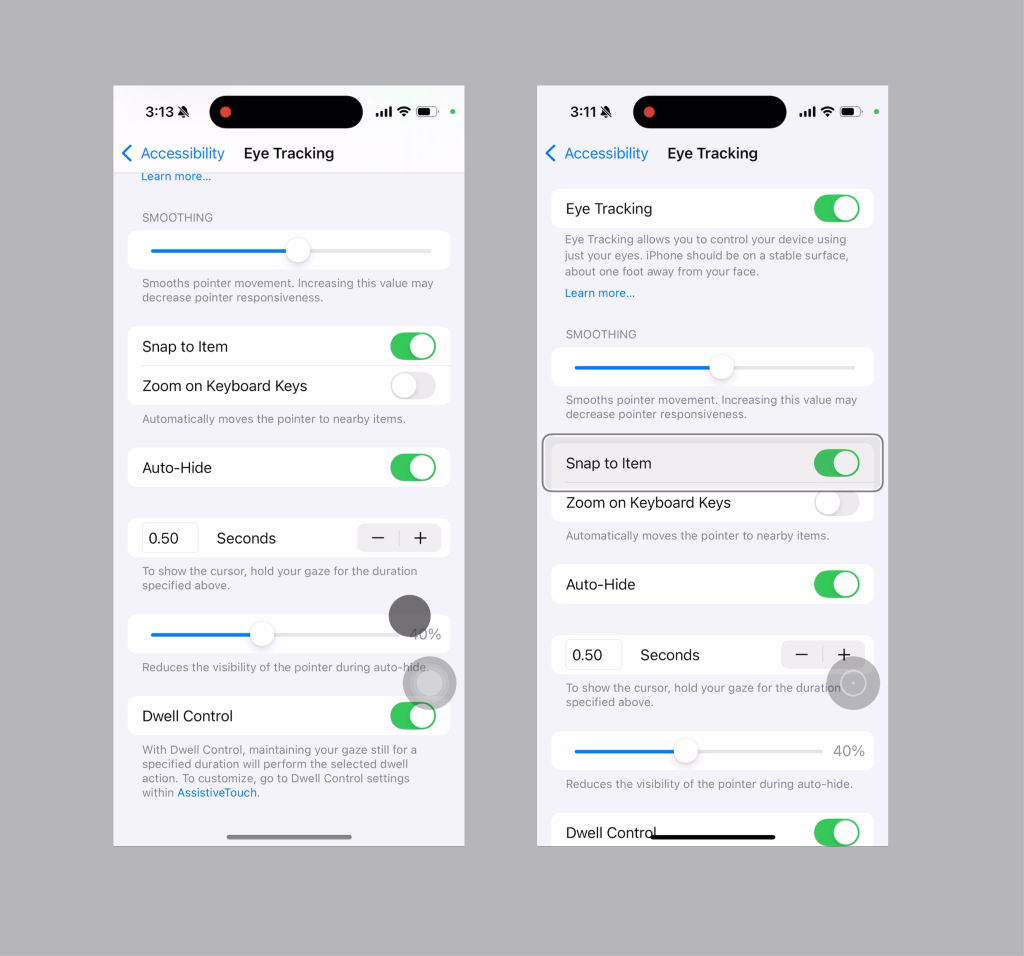

After calibration, the built-in eye tracking feature follows the user's eye movements and displays their gaze position on screen as a large dark grey circle. When the user's gaze moves over a selectable on-screen item, the item is highlighted with a high-contrast focus ring. Together, these two representations of gaze position give users continuous visual feedback, which makes the overall experience easier to use.

Customized Navigation

Navigation is also highly customizable. Users can choose to hide the dark grey circle and show only the focus ring, giving those who find the persistent on-screen circle distracting a more focused experience.

The “Snap to Item” feature, which snaps the pointer to a nearby on-screen item, reduces how precise users need to be with their eye movements. This degree of customization helps Apple support a broad range of individual needs in ways that are compatible with users' differing abilities.

Impact So Far

A preliminary look at Reddit’s r/AssistiveTechnology suggests that iOS eye tracking is “not quite there yet” and that external tools still provide a smoother and more effective experience for disabled users. However, the general tone is optimistic that built-in technology will continue to improve and eventually become more effective.

References

https://backlinko.com/iphone-vs-android-statistics

https://www.reddit.com/r/EyeTracking/comments/1fccb2g/will_apples_eye_tracking_change_everything/