iOS Live Captions is an assistive technology available on iPhone, iPad, and Mac that transcribes spoken audio in real time across digital and in-person communication. It converts speech into on-screen text, enabling people to follow in-person conversations, phone calls, online videos, and streaming services without relying on interpreters. The feature is particularly beneficial for individuals who are deaf or hard of hearing. It also helps individuals with learning and cognitive disabilities, as well as people in noisy environments who may prefer reading text over listening to audio.

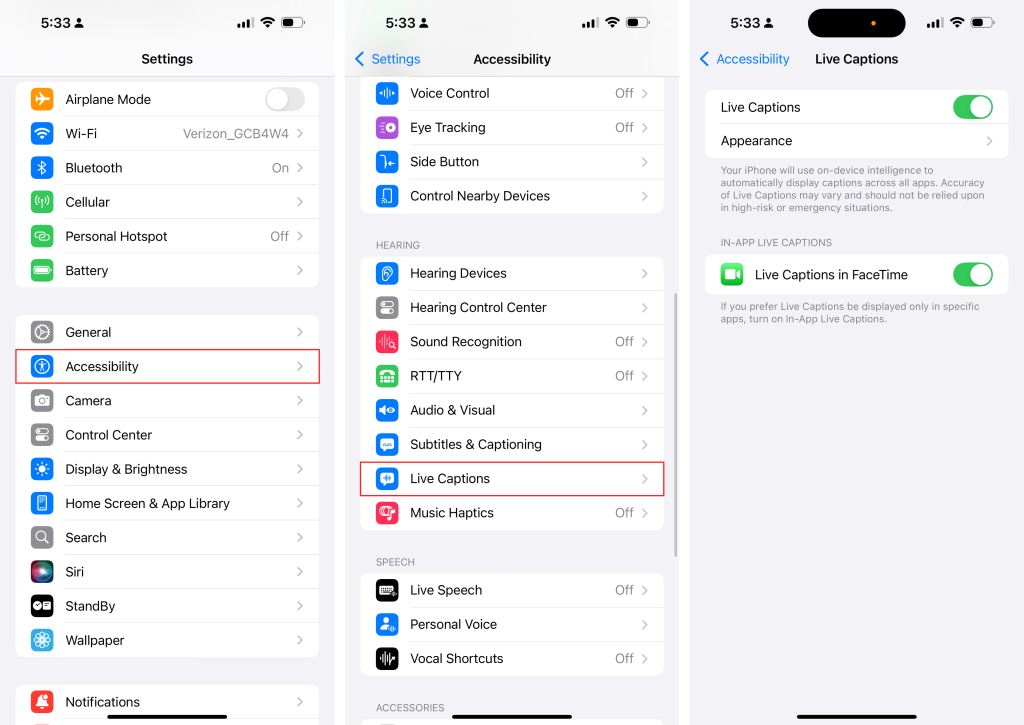

How to Use Live Captions on iPhone

Function 1: Real-Time Captions

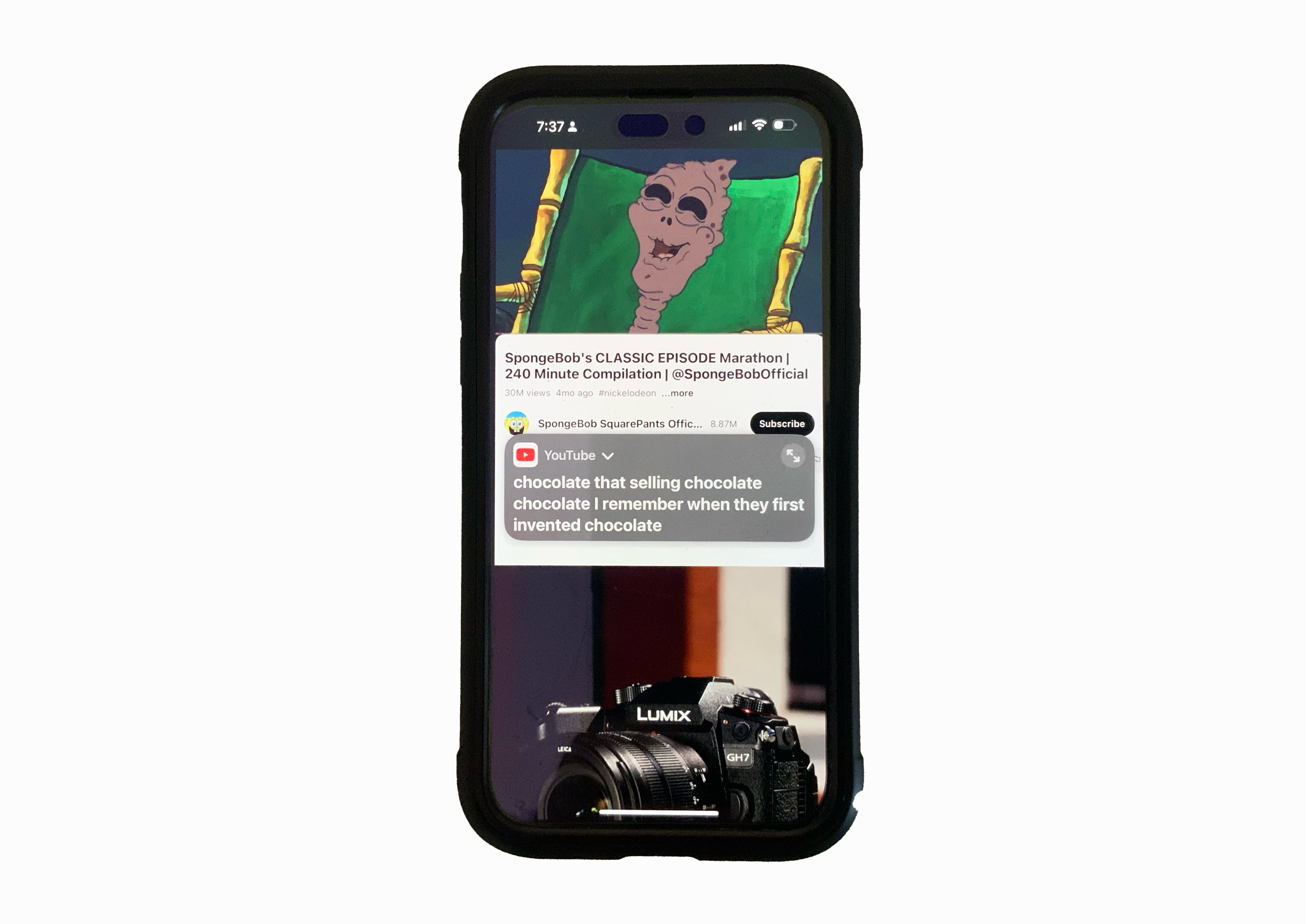

Live Captions provides real-time speech-to-text transcription for a variety of audio sources, such as phone calls, FaceTime, podcasts, music, online videos, and in-person conversations. It also works with third-party applications outside of Apple’s native apps, such as Zoom, Spotify, and YouTube. This allows users to follow virtual or in-person conversations, engage in discussions, and access video content without needing to hear the audio. The floating caption window is adjustable: users can move it anywhere on the screen, expand it to show the full transcription, or minimize it when not in use.

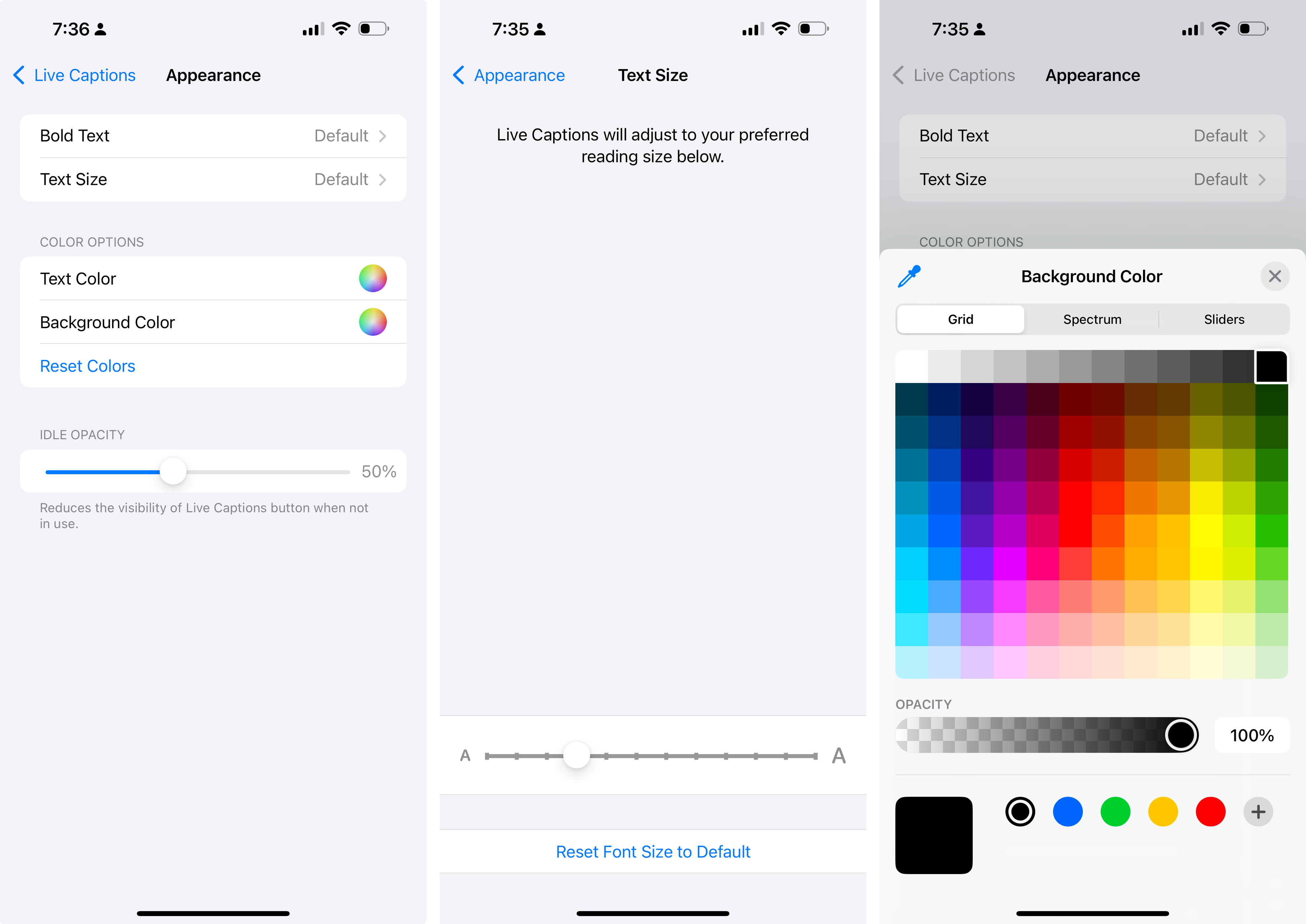

Function 2: Customizable Caption Display for Better Readability

The customization settings allow users to manage the appearance of displayed captions. Users can adjust the text size, color, boldness, background color, and opacity to suit their needs and improve visual clarity and readability. This customization not only benefits individuals who are deaf or hard of hearing but also helps non-English speakers and individuals with learning or cognitive disabilities who may benefit from text-based support.

Function 3: Speaker Labels in FaceTime Calls

In FaceTime calls, Live Captions labels each participant's speech, making it easier to identify who said what. This feature is particularly helpful for those who are deaf or hard of hearing because it reduces confusion during conversations.

Model of Disability

Functional Solutions Model of Disability

The Functional Solutions Model of Disability focuses on using innovative technology to eliminate barriers caused by impairments. Traditionally, individuals who are deaf or hard of hearing have had to rely on interpreters, third-party captioning services, or pre-written subtitles to access spoken content. Live Captions removes these barriers by providing real-time speech-to-text transcription, allowing users to participate independently in phone calls, virtual meetings, streaming services, and in-person conversations without depending on external assistance.

Social Model of Disability

The Social Model of Disability argues that disability is caused by societal barriers rather than by a person's impairment or difference. Live Captions addresses this by integrating real-time captions as a built-in feature across the Apple ecosystem, removing communication barriers for individuals who are deaf or hard of hearing. In FaceTime calls, the speaker-labeling function identifies who said what, which gives the conversation a clear structure and reduces confusion for people relying on captions. This makes discussions more organized, accessible, and inclusive.

Limitations of the Current Design

Currently, Live Captions is available only on iPhone 11 and later, and only when the primary language is set to English (US) or English (Canada). This restriction makes the feature inaccessible to multilingual users and to users with iPhone models older than iPhone 11 who rely on captions for communication. Additionally, users cannot record, store, or screenshot transcriptions, which limits their ability to refer back to them later. According to Apple Support, accuracy may also be lower for children’s voices or strong accents, which can affect usability in diverse environments.

To enhance inclusivity and usability for diverse communities, expanding language support beyond English (US and Canada) would make Live Captions accessible to a wider audience. Enabling screenshots or recordings of transcripts would allow users to save them and review them later. Integrating a built-in translation function would allow real-time captions to be translated into different languages, benefiting multilingual users and enhancing global communication.

References

https://support.apple.com/guide/iphone/get-live-captions-of-spoken-audio-iphe0990f7bb/ios

https://www.cnbc.com/2022/05/17/apple-is-adding-live-captions-to-iphone-ipad-and-mac.html