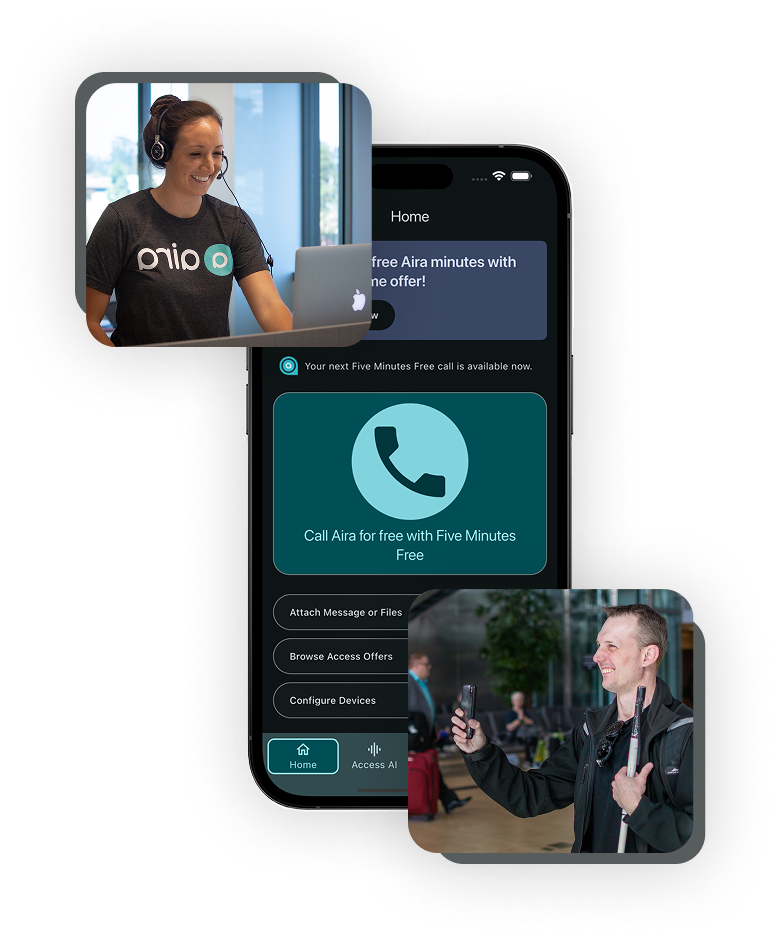

Aira allows a person who is blind or has low vision to tap a button and instantly connect with a trained interpreter who describes surroundings, reads text, assists with navigation, and supports online tasks. There is no need for advance scheduling, and the service is available 24/7/365.

Feature 1: 24/7 visual interpreting

A central feature of Aira Explorer is its instant connection to a professional visual interpreter. Using the phone’s camera feed, a trained agent can describe surroundings, read printed text, assist with navigation, interpret digital displays, or help complete online tasks. Because the service requires no advance scheduling and operates 24/7/365, users can initiate support at any time.

This feature strongly reflects the social model of disability, which defines disability as the result of societal and environmental barriers rather than individual impairments. In stores, airports, and restaurants, information is often communicated exclusively through sight. Rather than positioning blindness as the problem, Aira highlights how these environments are designed without multimodal access in mind. Real-time visual interpreting acts as a functional bridge, filling gaps created by inaccessible design.

At a surface level, live interpretation could be read through the medical model, which frames disability as an individual deficit requiring compensation. In this framing, Aira might appear to “replace” missing visual input with auditory information. However, unlike technologies that aim to correct or cure impairment, Aira does not attempt to “fix” blindness. Instead, it provides a tool that helps users navigate a world built primarily for sighted people, reinforcing their autonomy and control: the user directs the interaction, deciding what to look at, what to read, and when to disconnect.

Feature 2: Access AI

Access AI is a free feature within Aira Explorer that allows users to take or upload an image and receive an immediate AI-generated description. Users can ask follow-up questions about the image and, if desired, verify the response with a human visual interpreter. Reviews suggest that people use it to read menus, interpret signs, check different spaces and the labels on objects, read digital thermostats, identify colors and clothing, and understand printed materials.

Unlike the live interpreter feature, Access AI introduces automation into the accessibility landscape. While AI increases efficiency and privacy, allowing a blind person to check their outfit or read a label independently, it also raises questions about reliability and bias. The option to verify descriptions with a human interpreter acknowledges these limitations.

Additional Thoughts

The service runs on a subscription-based model with nine plan options, ranging from about $26 to $1,160 per month. At first glance, the upper end of that range feels extremely expensive. However, compared to many other visual assistive technologies we discussed in class, such as smart glasses or specialized hardware that cost thousands of dollars upfront, Aira feels more accessible because it works through a smartphone and offers flexible pricing tiers.

In addition, Aira has partnered with companies such as Starbucks, Google, Walmart, and Target so that users can access the service for free within their stores. I think this partnership model is especially interesting because it shifts some responsibility onto corporations rather than placing the entire financial burden on individuals who are blind or have low vision. In that sense, accessibility becomes partially embedded into public spaces instead of being treated as a personal expense.

Another aspect I personally found compelling is the autonomy provided by Access AI. I appreciate how the feature allows users to independently take a photo and receive a description without always having to initiate a live call. In more sensitive situations, such as checking for stains on clothing during menstruation or identifying menstrual products based on packaging, this kind of tool could increase privacy and reduce the need to involve someone else. While people who are blind have many strategies for navigating menstruation and bodily awareness, some tasks that depend on visual confirmation can still require assistance in highly visual environments. However, I am also aware that AI-generated descriptions are not always fully accurate, and I am unsure how reliably the current model identifies subtle details like stains or color variations.

Overall, Aira Explorer feels like a step toward more flexible and adaptable assistive technology. It is not perfect, and cost remains a factor, but its corporate partnerships and integration of AI create a model that feels more inclusive than many high-cost, hardware-based alternatives.