In a world that relies primarily on sight, simple everyday tasks can become a challenge for visually impaired users. This is where technology can reduce friction. Be My Eyes is a mobile application that helps visually impaired users better discern their surroundings in everyday situations.

How the App Works

As technology becomes increasingly prevalent in everyday life, digital interfaces become a practical way to deliver solutions. It is equally important to give users a sense of empowerment without making them entirely dependent on technology. Be My Eyes strikes this balance by combining digital accessibility with human support. The app connects users with volunteers over video calls, and the volunteers help them recognise objects or guide them through everyday tasks. In doing so, it bridges the gap between self-empowerment and positive assistance.

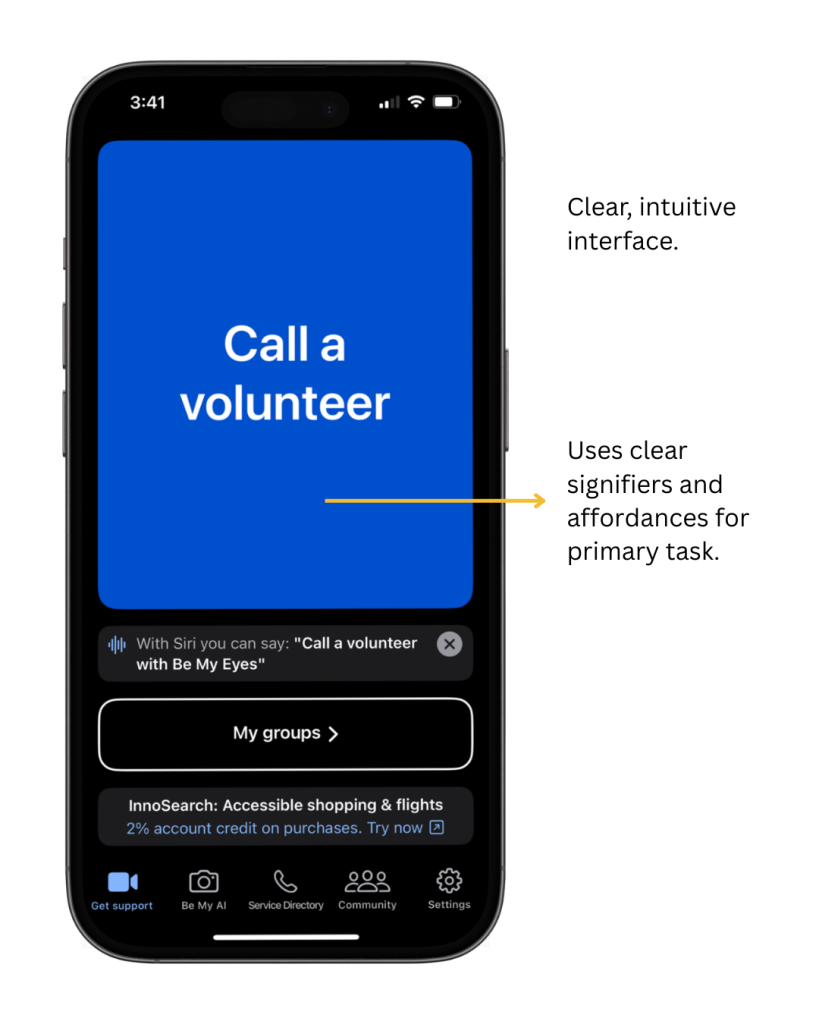

The app has a minimal, intuitive interface with clear affordances and signifiers, which reduces cognitive load and improves efficiency in task completion. Voice-based interaction lets users complete the primary task of connecting with a volunteer, ensuring immediate accessibility in times of need.

The app also includes a feature called Be My AI, which uses AI to describe the user’s surroundings and answers questions within seconds, further empowering users.

Looking at it through the different accessibility models

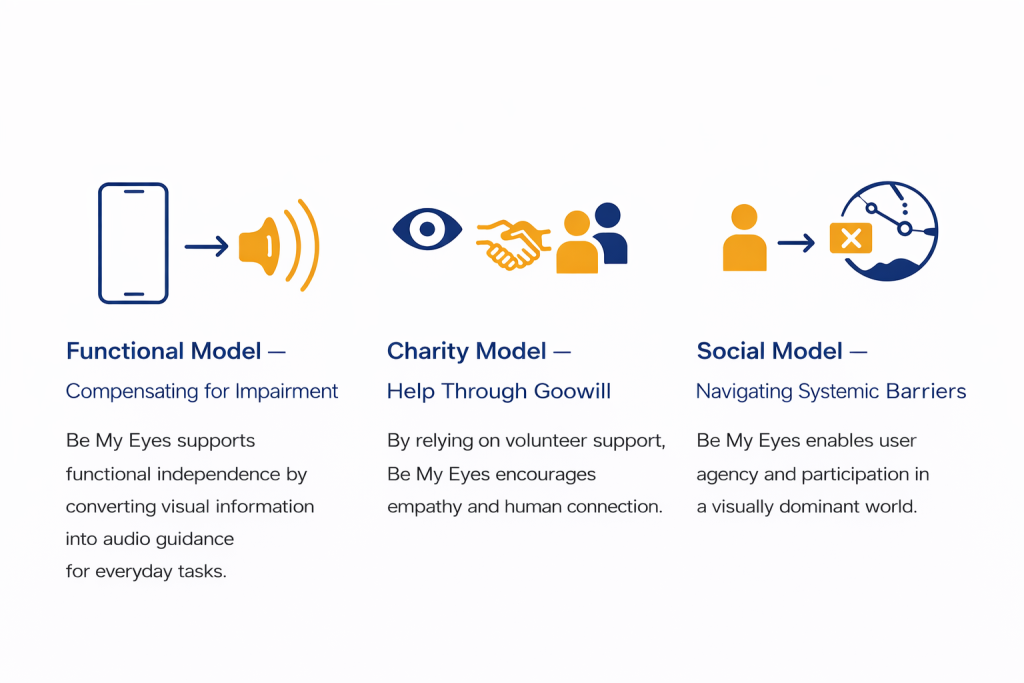

Through its human-centered approach, Be My Eyes reflects multiple models of accessibility and disability, namely the Social, Functional and Charity Models. Rather than aligning with a single perspective, it sits at the intersection of all three, which reveals both its strengths and its weaknesses.

The app aligns most strongly with the Functional Model: it uses minimal technology and requires no tools or equipment beyond a smartphone. Audio guidance for major tasks and AI-assisted visual interpretation convert visual inputs into audio outputs. This increases users' functional independence by helping them complete everyday tasks such as reading labels and identifying objects.

The app encourages empathy, but because it relies on volunteers who help out of kindness, it can unintentionally reinforce a power imbalance and drift towards the Charity Model. Disabled users become passive recipients of help, dependent on the availability and goodwill of others, rather than receiving assistance as an inherent right.

Lastly, the app draws on the Social Model by giving users agency within existing systems. Users initiate interactions themselves and seek assistance only when they genuinely need it, which keeps them in control. The app enables participation in a visually dominant world, but it acts more as a quick fix than as a way of eliminating inaccessible design or changing the system.

Conclusion

Taken together, Be My Eyes demonstrates how assistive technology can bridge gaps in accessibility while also highlighting the need for systems to change. It supports inclusion within existing systems and, in doing so, underscores the importance of designing environments that are accessible without assistance in the first place.

References: