Link to blog: https://ixd.prattsi.org/2018/10/good-robot-bad-robot-the-ethics-of-ai/

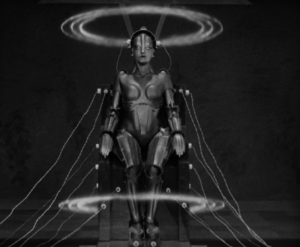

Introduction: The ethically minded have always weighed benefit against risk as AI becomes more prevalent in everyday life. The topic has been picked apart in everything from pulpy sci-fi novels of the 1950s to well-regarded popular science journals of today, when increasingly human-like AI is commonplace.

This blog post will explore modern viewpoints pertaining to the ethics of artificial intelligence.

Keywords: ethics, usability, usability theory, AI, robots, INFO644-02, LIS644-02

I examined three different sources for my second blog post. The first is a viewpoint on the topic of artificial intelligence from Nature. The first author, Stuart Russell, discusses the real-world implications of creating autonomous weapons systems. Russell describes the following scenario, writing “the technology already demonstrated for self-driving cars, together with the humanlike tactical control learned by DeepMind’s DQN system, could support urban search-and-destroy missions” (415).

Russell describes a domino effect: if one country adopts these methods in warfare, others will follow, creating a more ruthless, or at least more caustic, international climate. Russell then cites the Geneva Convention, the agreed-upon body of law that governs what is allowed in international warfare. He lists its three criteria for any attack: “military necessity; discrimination between combatants and non-combatants; and proportionality between the value of the military objective and the potential for collateral damage” (416).

The author is astute, in my opinion, in noting how carefully worded the language here is. “Necessity” can really mean anything, as could a phrase like “potential for collateral damage.” Anyone can argue that inaction will lead to something much worse, so it seems that world leaders are always teetering on the line between what is and isn’t justified.

We think of this often when it comes to chemical warfare and “world-ending” buttons, but more rarely is the concept applied to AI.

Russell concludes with a call for collaborative research among relevant professionals and for action even from the public. Ethics experts should work with scientists, and ordinary people should be consulted as well, in town-hall-style settings. To do nothing and let countries grow laxer about incorporating crueler forms of AI technology into warfare is the worst response, according to Russell.

My second source was web content from the London School of Economics and Political Science by Mona Sloane entitled “Making artificial intelligence socially just: why the current focus on ethics is not enough.” Sloane makes a call similar to Russell’s, echoing the notion that people from many fields should come together for meaningful dialogue and better resolutions around the issue. Sloane’s issue is not with AI in a military setting, though; the beef here is with another way AI can play out among world powers: how do ethics in AI play out in an economic landscape?

Sloane describes an interconnected weave of public and private funding for advancements in “Ethics Research in AI.” Everyone from whole countries to corporations like Facebook invests in work meant to address everything from malfunctions in driverless cars to making Facebook’s algorithms more inclusive and less prone to spreading false information.

Sloane advocates a highly critical look at AI and is adamant that no one be marginalized by AI on a broad scale, also emphasizing the importance of “data transparency” between user and interface. The author stresses that the themes of “technology, data, and society” need to be bridged in order to critically examine what ethics in AI looks like in the modern world.

My final source is a conference presentation that the authors turned into a paper. Like the previous sources I’ve examined, it describes how ubiquitous AI is and stresses the need for AI to be critically examined through an ethical lens.

The article begins broadly by listing three philosophical approaches to ethics, which are numbered below:

1. “Consequentialist ethics: an agent is ethical if and only if it weighs the consequences of each choice and chooses the option which has the most moral outcomes. It is also known as utilitarian ethics as the resulting decisions often aim to produce the best aggregate consequences.

2. Deontological ethics: an agent is ethical if and only if it respects obligations, duties and rights related to given situations. Agents with deontological ethics (also known as duty ethics or obligation ethics) act in accordance to established social norms.

3. Virtue ethics: an agent is ethical if and only if it acts and thinks according to some moral values (e.g. bravery, justice, etc.). Agents with virtue ethics should exhibit an inner drive to be perceived favorably by others.”

These are general in that they all apply to being a good person, but they are also relevant in navigating the murky waters of AI. Like Russell, the conference authors list a multitude of areas that these questions touch; unique among my three sources is a discussion of cryptocurrencies like Bitcoin.

Having a digital currency is something that would have been hard to conceive of when I was growing up. But if you think about it, there is now basically an AI-tinged version of everything. By the definitions people had of AI in the 1950s, a Bluetooth earpiece would qualify. We take for granted the ways we interact with AI, becoming less scared of advancements as they infiltrate our lives, yet always worrying that the next one might bring about some metaphorical dropping of the other shoe. The calls to action expressed by the authors are necessary, but I have confidence that humans will figure it out like we always have.

Works Cited

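- Sloane, Mona. “Making artificial intelligence socially just: why the current focus on ethics is not enough.” London School of Economics and Political Science. http://blogs.lse.ac.uk/politicsandpolicy/artificial-intelligence-and-society-ethics/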

- https://www.ijcai.org/proceedings/2018/0779.pdf

- Russell, Stuart. “Ethics of Artificial Intelligence.” Nature, vol. 521, 28 May 2015, pp. 415-17.