An estimated 250 million people worldwide have atypical or non-standard speech, meaning they may have difficulty making their words understood. Project Relate is an Android beta application built by Google that uses machine learning to help individuals with non-standard speech communicate more effectively in daily face-to-face interactions.

Advances in artificial intelligence, particularly in automatic speech recognition, have enabled speech-to-speech conversion. The Speech and Research teams at Google have leveraged this technology to develop a mobile application that aims to help individuals communicate more easily. Participants recorded over a million speech samples to train the speech recognition model. The app is built around three core features: Listen, Repeat and Assistant.

Utility

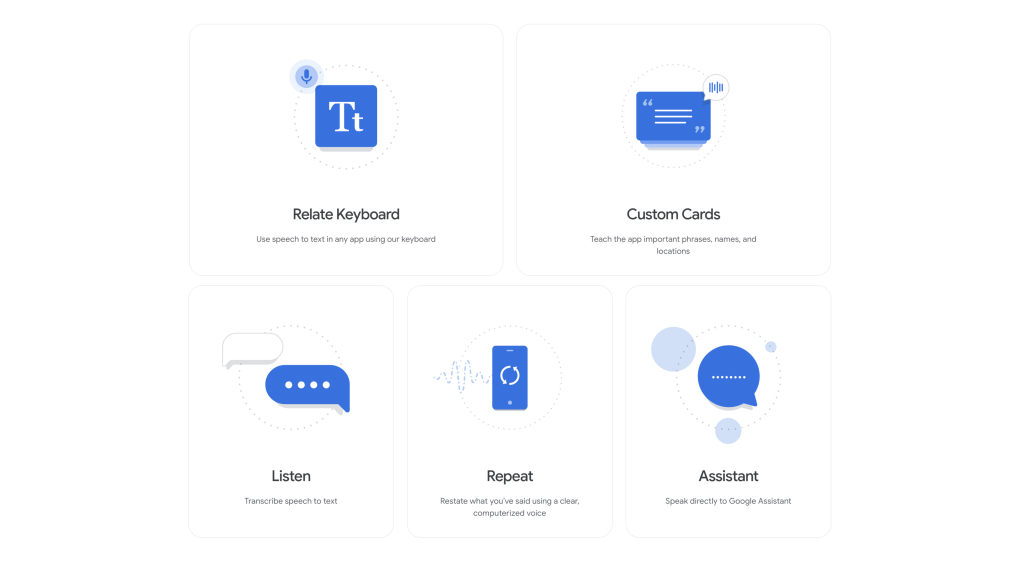

The application offers five main features. The first four, listed below, help individuals with non-standard speech interact both face-to-face and via text.

- Relate Keyboard: It uses personalised speech recognition to convert speech to text in any app

- Listen: It transcribes speech to text in the app, so it can serve as live “closed captioning” for you

- Repeat: It restates what you’ve said in a computerized voice, which helps in face-to-face interactions

- Assistant: It lets you speak directly to the Google Assistant from within the app to perform actions like setting an alarm, playing a song or asking for directions

The fifth feature helps the application learn, making it as personalised as possible for each individual.

- Custom Cards: It lets users teach the app important phrases, names and locations to personalise the speech recognition

These features primarily align with the functional solutions model of disability, as they assist individuals with non-standard speech in all aspects of communication through their mobile phones.

Additionally, the idea behind the application resonates with the social model of disability: it recognises speech impairment as a barrier to communication, a basic human need, especially when interacting with currently designed AI models. It strives to include many kinds of speech to make speech recognition universal.

Usability & Accessibility

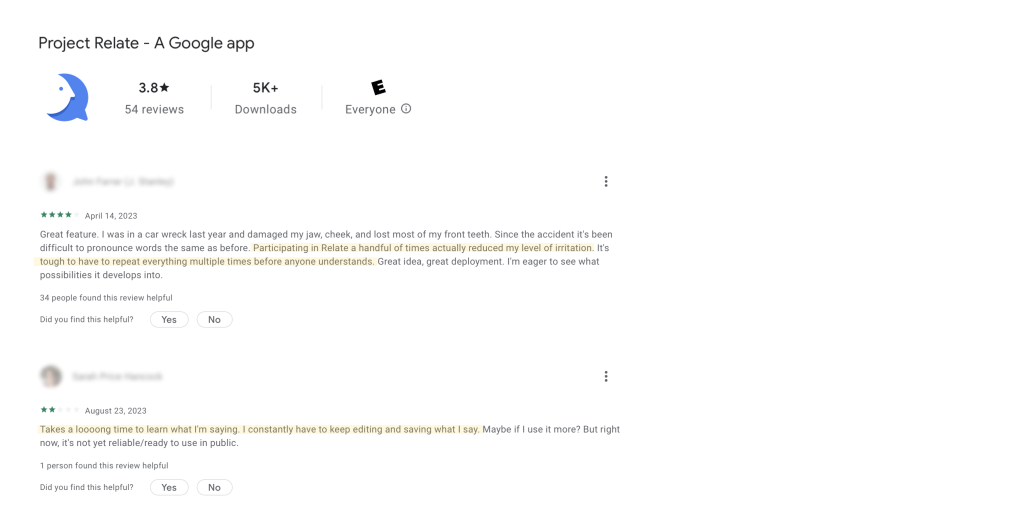

The application is well designed, with usability at its centre. Its features are easy to use and provide streamlined workflows. The user interface follows the functional model of disability, with large buttons in contrasting colours.

Desirability

The product's utility, accessibility and most aspects of its usability have made it desirable, and it has proved helpful to some of its users. Others, however, have raised concerns about its reliability, as the application “takes a lot of time to learn” what they’re saying.

Affordability & Compatibility

Currently, the application is available only to Android users, as the speech recognition model training is being applied to the Google Assistant. The application is in its beta phase and is free to use.

Viability

The researchers have recorded over a million speech samples from participants with non-standard speech and used machine learning to train the speech recognition model. With the beta version, they aim to collect many more samples by asking beta users to record 500 phrases, which also personalises the speech recognition for each user. With more speech samples and support from its users, the application could eventually work on its own, without each individual needing to record 500 phrases.

Conclusion

In my opinion, the scope of Project Relate extends beyond the mobile application. It could also be used to enhance the Google Assistant experience universally. Furthermore, speech-to-speech conversion could be used in messaging apps to minimise barriers.

References

- https://blog.google/outreach-initiatives/accessibility/project-relate/

- https://sites.research.google/relate/

- https://play.google.com/store/apps/details?id=com.google.research.projectrelate&hl=en_US&gl=US

- https://www.youtube.com/watch?v=e6z5rEgoqnI

- https://twitter.com/GoogleIndia/status/1604741537065492480