Assistive technology has made it possible, albeit with considerable room for improvement, for people with specialized needs to engage with their surroundings more easily. These tools, software, and devices address gaps identified across various models of disability. Building on this wave of accessibility work, Apple’s Live Speech aims to break down barriers by letting individuals with speech disabilities or communication challenges participate through real-time text-to-speech during phone calls, FaceTime calls, and in-person conversations. Personal Voice, a Live Speech extension launched in 2023 as part of the iOS 17 update, goes further by letting users train an AI model to speak in their own voice, adding another layer of personalization to assistive technology.

“At the end of the day, the most important thing is being able to communicate with friends and family.”

~ Philip Green, board member and ALS advocate at the Team Gleason nonprofit, who has experienced significant changes to his voice since receiving his ALS diagnosis in 2018.

Utility

Building accessibility features into a mobile operating system creates a more equitable and inclusive environment in which users can carry out day-to-day activities with less friction. Imagine an educational setting where a student with a speech disability or atypical (non-standard) speech can take an active part in class discussions by using Live Speech to express their thoughts independently. Think about workplaces where the same person can confidently contribute to meetings and presentations. As these examples suggest, the features align well with the economic, biopsychosocial, and functional solutions models of disability, because they help individuals with non-standard speech interact with their environment using a standard device.

Apple’s Live Speech offers four main features that cater to diverse needs and preferences:

- Pre-recorded Phrases: Users can save frequently used phrases and reuse them on demand instead of typing them every time, which saves time and energy.

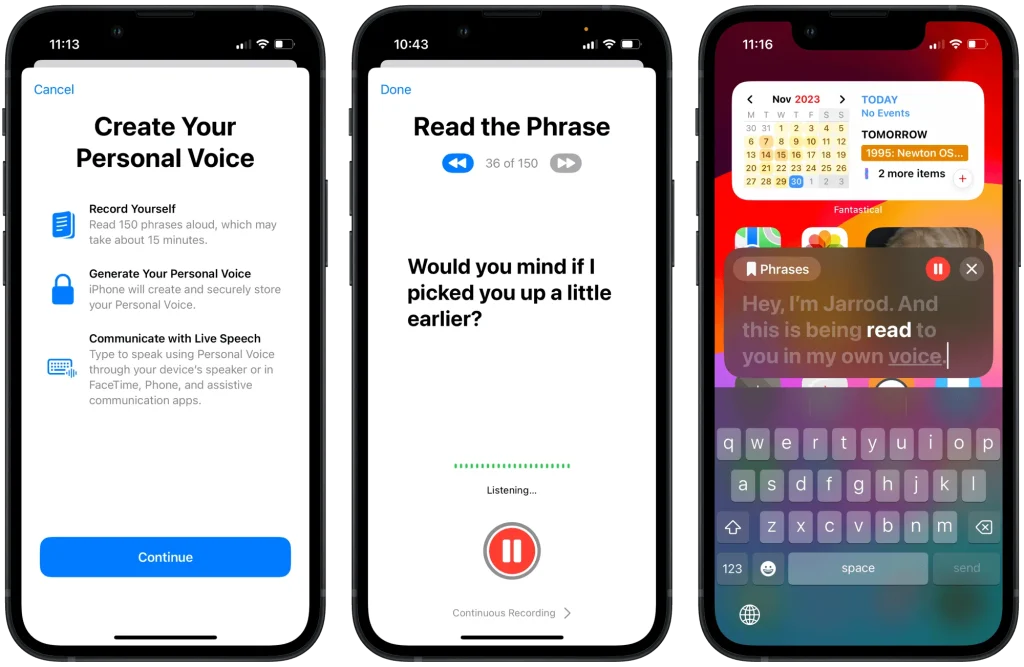

- Personal Voice: This AI-based feature, introduced in iOS 17, lets users create a synthetic voice that sounds remarkably like their own. According to Apple, users read aloud a set of prompts (around 150 phrases, or about 15 minutes of speech), which is then used to train a model that mimics their voice. For users at risk of losing their ability to speak, such as those recently diagnosed with ALS (amyotrophic lateral sclerosis) or other conditions that can progressively affect speech, Personal Voice offers a sense of control and a simple way to create a voice that sounds like them.

“If you can tell them you love them, in a voice that sounds like you, it makes all the difference in the world — and being able to create your synthetic voice on your iPhone in just 15 minutes is extraordinary.”

~ Philip Green

- Multilingual Support: Live Speech currently supports over 20 languages, which makes it usable by people from various backgrounds and regions and lets users communicate in their native languages.

- On-Device Processing: Apple states that the machine learning and data processing happen on the device, keeping users’ voice data private and secure.

Usability and Accessibility

Live Speech is easy to find and turn on in the accessibility settings of an iPhone, iPad, or Apple Watch. Once it is enabled, triple-clicking the side (power) button opens a text prompt where users type what they want to say and have it spoken aloud. The feature’s main strengths are its ease of use and its personalization options: users can add their Personal Voice, save frequently used phrases, and choose from over 20 languages and dialects.

In terms of accessibility, the feature is helpful for individuals with speech-related disabilities, but it relies primarily on audio output, which potentially excludes people with hearing impairments. To extend its usefulness to people with visual impairments, the text input could be paired with the on-screen braille input built into iOS or with an external physical braille keyboard.

Desirability

The desirability of Live Speech and Personal Voice is multifaceted. For individuals with speech disabilities, these features offer immense benefits in overcoming the challenges highlighted by the economic and biopsychosocial models of disability. For other users, the value lies primarily in the multilingual support, which makes the features desirable to a much wider audience. This is the curb-cut effect in action: “When we design for disabilities, we design for all.”

Viability

Both Live Speech and Personal Voice offer promising ways to improve accessibility. The AI model’s ability to mimic a user’s voice from just 15 minutes of sample phrases is impressive. Apple’s accessibility features are commendable and come at no additional cost, but the devices themselves carry a premium price tag. Long-term viability and further development of these features could focus on the following areas:

- Offering less robotic, more natural-sounding, and non-stereotypical speech options.

- Reducing the accuracy problems that arise while recording training data for Personal Voice (caused by varied accents or background noise).

- Addressing privacy concerns and mitigating potential misuse.

Overall, Live Speech and Personal Voice demonstrate high utility by addressing the challenges faced by those who struggle to voice their thoughts confidently. They are easy to use and broadly accessible, with scope to extend compatibility for people with hearing or visual impairments, and they let users interact with others on a standard mobile device without additional software or equipment.

References

https://9to5mac.com/2023/06/10/apple-personal-voice-creation-feature-ios-17/

https://lifehacker.com/these-are-all-the-new-accessibility-features-in-ios-17-1850911314